Aug 30 2016

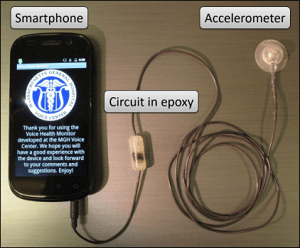

Accelerometers capture data about the motions of patients' vocal folds to determine if their vocal behavior was normal or abnormal. Credit: Daryush Mehta/MGH

Accelerometers capture data about the motions of patients' vocal folds to determine if their vocal behavior was normal or abnormal. Credit: Daryush Mehta/MGH

Speech, the most basic human instinct, can be challenging for many people. One in 14 working-age Americans experience voice related difficulties, mostly linked with abnormal vocal behaviors.

Some of these disorders can also damage the vocal cord tissue, resulting in the formation of polyps or nodules that hamper with the production of regular speech.

Several behaviorally-based voice disorders are still not understood. To be more specific, patients with muscle tension dysphonia (MTD) often experience a weakening feeling in their voice quality together with vocal fatigue, also known as tired voice, in the absence of evident vocal cord damage or various other medical issues, which makes it difficult to diagnose and treat people.

A team from MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) and Massachusetts General Hospital (MGH) hopes that machine learning will help to improve the understanding of conditions like MTD.

Researchers used accelerometer data gathered from a wearable device, created by researchers at the MGH Voice Center in order to illustrate the possibility of detecting differences between subjects with MTD and matched controls.

The same techniques also revealed that MTD subjects displayed behavior that was similar to that of the controls after going through a voice therapy.

We believe this approach could help detect disorders that are exacerbated by vocal misuse, and help to empirically measure the impact of voice therapy. Our long-term goal is for such a system to be used to alert patients when they are using their voices in ways that could lead to problem.

Marzyeh Ghassemi, Ph.D. Student, MIT

The paper’s co-authors include John Guttag, MIT professor of electrical engineering and computer science; Zeeshan Syed, CEO of the machine-learning startup Health[at]Scale; and physicians Robert Hillman, Daryush Mehta and Jarrad H. Van Stan of Massachusetts General Hospital.

How it Works

Current methods to apply machine learning to physiological signals frequently entail supervised learning, which would require researchers to meticulously label data and provide desired outputs.

In addition to being time-consuming, these approaches at present cannot really help categorize utterances as normal or abnormal, because there is no proper understanding of the connection between accelerometer data and voice misuse.

Since the CSAIL team was not aware when vocal misuse was occurring, they chose to use unsupervised learning, where data is not labeled at the instance level.

People with vocal disorders aren’t always misusing their voices, and people without disorders also occasionally misuse their voices. The difficult task here was to build a learning algorithm that can determine what sort of vocal cord movements are prominent in subjects with a disorder.

Marzyeh Ghassemi, Ph.D. Student, MIT

The research was split into two groups: patients who had been diagnosed with voice disorders, and a control group of people without disorders. Wearing accelerometers on their necks, each group carried on with their daily routines. The accelerometers captured the motions of their vocal folds.

Researchers then studied both groups’ data, and analyzed over 110 million “glottal pulses” that signify one opening and closing of the vocal folds. The team could identify major differences between patients and controls by comparing clusters of pulses.

The team also discovered that post voice therapy, the distribution of patients’ glottal pulses resembled those of the controls to a greater extent. According to Guttag, this is the first study to apply machine learning to supply objective confirmation of the positive effects of voice therapy.

When a patient comes in for therapy, you might only be able to analyze their voice for 20 or 30 minutes to see what they’re doing incorrectly and have them practice better techniques. As soon as they leave, we don’t really know how well they’re doing, and so it’s exciting to think that we could eventually give patients wearable devices that use round-the-clock data to provide more immediate feedback.

Susan Thibeault, Professor, University of Wisconsin School of Medicine and Public Health

Looking Ahead

The study aims to use the data to enhance the lives of individuals suffering from voice disorders and to potentially help the diagnosis of particular disorders.

The researchers also plan to explore the fundamental reason why specific vocal pulses often occur in patients than in controls.

Ultimately we hope this work will lead to smartphone-based biofeedback. That sort of technology can help with the most challenging aspect of voice therapy: getting patients to actually employ the healthier vocal behaviors that they learned in therapy in their everyday lives.

Robert Hillman, Physician, Massachusetts General Hospital

The research received partial financial support from the Intel Science and Technology Center for Big Data, the Voice Health Institute, the National Institutes of Health (NIH) National Institute on Deafness and Other Communications Disorders, and the National Library of Medicine Biomedical Informatics Research Training.