An estimated 80% of the ocean is still unexplored, but our current methods for exploring the ocean are often expensive, such as with ship-based observations, or limited in capability, as with buoys.

Instead, we are envisioning swarms of smart underwater robots that can navigate on their own, save energy by exploiting ocean currents, find optimal locations to gather data about the ocean and potentially follow and track marine life.

This research aims to show how Reinforcement Learning could equip robots with the intelligence needed to accomplish these tasks on their own. With global exploration and sampling of the ocean, we may be able to gain a better understanding of the physics of the ocean and climate change.

What inspired your research into developing artificial intelligence (AI) that will enable autonomous drones to use ocean currents to aid their navigation?

My advisor, Professor John Dabiri, has been working towards the goal of widespread ocean exploration by developing several technologies such as biohybrid jellyfish robots and seawater batteries. This research was a collaboration between us and Professor Petros Koumoutsakos, Guido Novati and Ioannis Mandralis.

The need for better ocean exploration technologies combined with the new, efficient Deep Reinforcement Learning algorithm called V-RACER developed by Professor Koumoutsakos and Guido Novati made this research possible.

How does this technology overcome the challenges associated with deep ocean exploration, such as data feeding and navigation issues?

It is virtually impossible to communicate with robots in the deep ocean from the surface without a physical tether. For the large-scale exploration of the ocean, this means that swarms of robots are on their own – it is not practical to send each robot information about incoming ocean currents and obstacles or help with directions through challenging waters.

To fix this problem, our Reinforcement Learning approach aims to make the robots smarter so they can decide on their own how to navigate efficiently through ocean currents. This autonomous approach may also open up new possibilities for ocean research, such as automatically tracking animals and bio-markers or discovering data-rich locations in the ocean for observation.

Can you give an overview of how reinforcement learning (RL) coupled with an on-board velocity sensor may be an effective tool for robot navigation?

Deep RL works by feeding information from on-board sensors into a deep neural network, which tells the robot what action to take. Then, this network is trained by looking at past experiences and adjusting the network to give better results.

For example, the neural network might learn how to find an ocean current to push the robot towards its destination. In our recent paper, we found that a simulated robot that can sense the surrounding water speed and direction can learn how to exploit currents and navigate effectively using Deep RL.

How do RL networks compare to conventional neural networks?

Machine learning often involves training on large data sets such as images or videos, which requires high-performance computers and neural networks with millions of neurons. In Deep RL, however, the neural networks are comparatively tiny since they often process data from a handful of sensors instead of millions of pixels from an image.

For this reason, we have been able to demonstrate Deep Reinforcement Learning on extremely small processors, using only five percent as much power as it takes to charge a typical phone.

How did you test the AI’s performance?

We tested the AI in simulated fluid flow and observed how often it reached a target destination while being pushed by strong currents. We limited the swimming speed; strategies such as always swimming towards the target only succeed 2% of the time.

In contrast, our AI approach learned how to navigate with a 99% success rate when using velocity sensors and around 50% when sensing other quantities such as vorticity.

Additionally, we measured how quickly our simulated robot could reach the target, which is important for real robots with limited battery capacities. We were excited to see the AI swim comparably fast to the optimal route through the fluid, despite only possessing knowledge of its immediate surroundings.

In your research, you mention that you hope to create an autonomous system to monitor the condition of the planet’s oceans. Do you think that this AI could be used to explore oceans on other worlds, such as Enceladus or Europa?

A strength of RL is its ability to adapt and learn new environments, which is promising for deployment in unknown environments, such as unexplored areas of our oceans or the subsurface oceans on Enceladus and Europa.

However, since RL learns using past experiences, we found that experiences in one flow field may not necessarily translate to a new one. So if the robot is transferred to a new environment, it may adapt to its new surroundings, but it will have to start learning essentially from scratch.

For this reason, we are working on baking-in fluid mechanics and optimal control into the AI to give it a head start in the learning process. If we can make these improvements, it will go a long way to show that RL could be deployed effectively throughout our oceans and potentially off-world.

To be able to make their own navigation decisions, drones would need to be able to gather information about the water currents they are currently experiencing. How did you achieve this?

With Reinforcement Learning, any number of sensors could be used to sense the surrounding water currents and learn how to navigate through them. But in order to survey the ocean for months at a time, robots would ideally be equipped with as few sensors as possible to conserve power, so choosing the most effective sensors is vital.

We found that sensing the fluid velocity worked best in our simulations, so for our physical robot, our first tests will be with an accelerometer and depth sensor to infer the flow velocity.

Did you come across any challenges during your research, and if so, how did you overcome them?

As the university campus was closed due to the current COVID-19 pandemic, we had to get creative when making our physical robot for testing out the RL algorithm.

Fortunately, I was able to use a 3D printer in my apartment to manufacture the robot and then test the robot inside my bathtub. Now, we are excited to be testing the robot in our water facilities, which have significantly more room for the robot to swim around.

Initially, you were just hoping the AI could compete with the navigation strategies already found in real swimming animals. Did you encounter anything unexpectedly exciting when testing the technology?

Animals have a variety of methods for sensing and navigating through the ocean, such as how seals sense water currents with their whiskers to hunt in the dark. One recent study found evidence that zebrafish avoid obstacles by sensing the surrounding water’s vorticity, which is a measure of how much fluid is spinning.

In our research, we tested navigation based on vorticity but were surprised to see that sensing the fluid velocity gave a better result than the zebrafish-inspired approach. We were excited to see the robot navigate so effectively using this method.

What is the ‘CARL-Bot,’ and how will it be used to test the efficacy of your research?

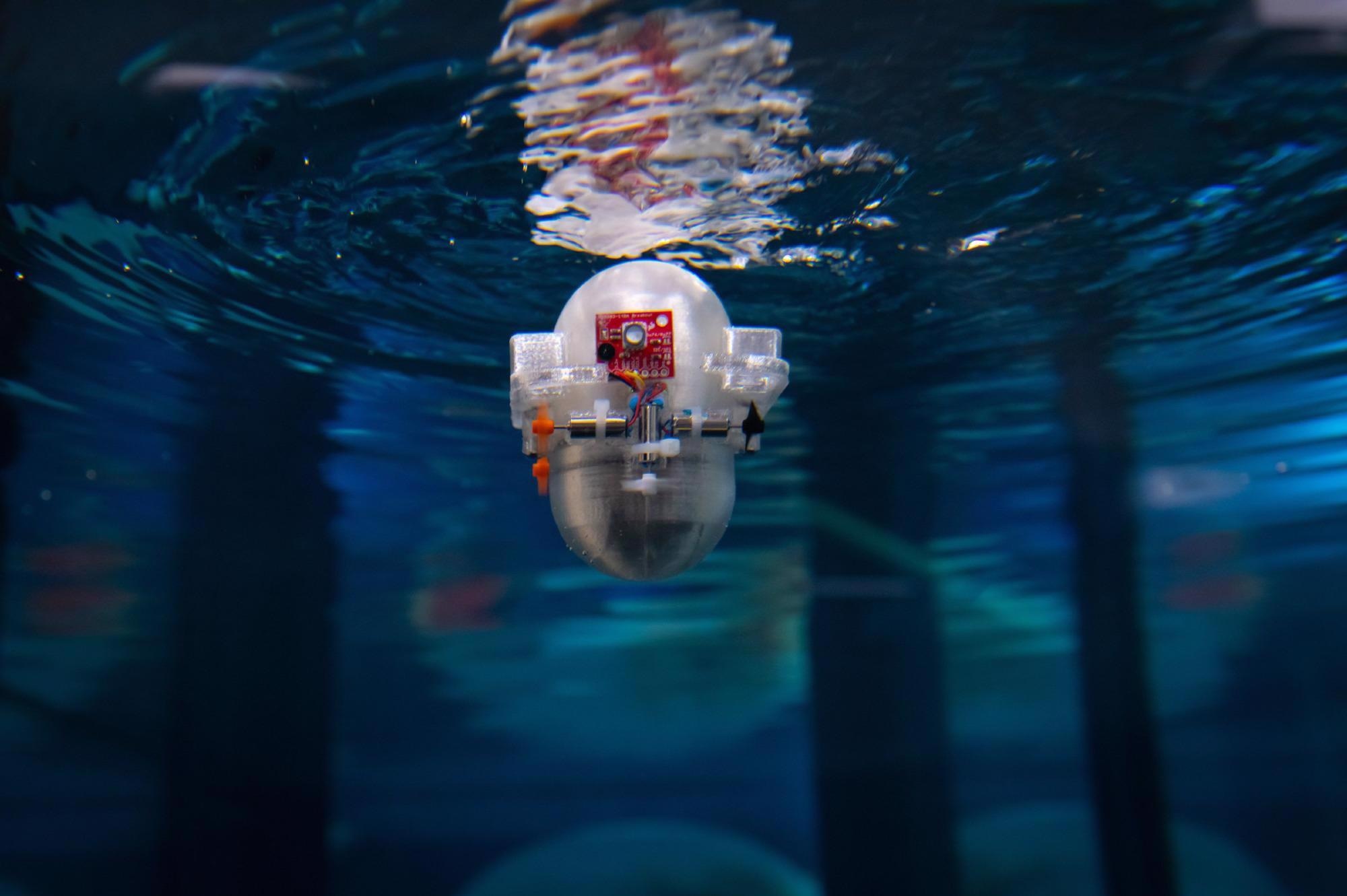

CARL-bot is the Caltech Autonomous Reinforcement Learning robot, which is a palm-sized, underwater robot for testing our RL-based navigation in the real world. It will show us how our recent results in a simulated fluid flow hold up for navigating in the real world with all of the associated challenges, such as imperfect sensors and motors, all while training on-board and in real-time.

Since most RL research is performed in simulation, we plan to use CARL-bot to investigate what types of algorithms, neural networks and sensors work best for small robots in real-world conditions.

By demonstrating RL-based navigation on CARL-bot, we aim to not only show that navigating with RL could be feasible in the real world but that it is small and efficient enough to work on small-scale robots.

Although the technology is still in its infancy, current research proves the potential effectiveness of RL networks for ocean navigation. What are the next steps for your research?

We have demonstrated that RL can work for navigating in a simulated environment, but the next step is to bring it into the real world using CARL-bot. In our lab, we have a 16-foot long water tank where we can create different fluid flows and currents to serve as a training ground for CARL.

Additionally, we have a two-story-tall water facility that can produce currents that mimic those found in the ocean, such as upwelling and downwelling. This will let us prove the effectiveness of the algorithm and robot for accomplishing diving tasks that mimic those used in essential oceanographic research.

We are also working on speeding up the learning process by incorporating knowledge of fluid mechanics and control theory in the algorithm.

About Peter Gunnarson

Peter Gunnarson is a Ph.D. student in Professor John Dabiri’s lab at Caltech. His research is focused on underwater robotics and Reinforcement Learning and is funded in part by the NSF Graduate Research Fellowship. Peter received his MS in Aeronautics at Caltech and his BS in Aerospace Engineering at the University of Virginia.

Peter Gunnarson is a Ph.D. student in Professor John Dabiri’s lab at Caltech. His research is focused on underwater robotics and Reinforcement Learning and is funded in part by the NSF Graduate Research Fellowship. Peter received his MS in Aeronautics at Caltech and his BS in Aerospace Engineering at the University of Virginia.

Disclaimer: The views expressed here are those of the interviewee and do not necessarily represent the views of AZoM.com Limited (T/A) AZoNetwork, the owner and operator of this website. This disclaimer forms part of the Terms and Conditions of use of this website.