Dec 20 2018

Researchers in Russia are one step closer to developing a novel digital system to process speech in real-life sound environments, for instance, when a number of people talk at the same time during an exchange.

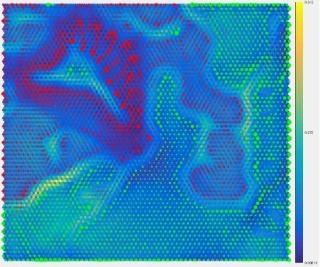

Clustering of the reaction of the auditory nerve for two phonemes on the distance matrix. (Image credit: Peter the Great St. Petersburg Polytechnic University)

Clustering of the reaction of the auditory nerve for two phonemes on the distance matrix. (Image credit: Peter the Great St. Petersburg Polytechnic University)

At Peter the Great St. Petersburg Polytechnic University (SPbPU), a Project 5-100 participant, scientists have modeled the mammalian auditory periphery to simulate the process of the sensory sounds coding. The study results have been reported in a scientific article titled “Semi-supervised Classifying of Modelled Auditory Nerve Patterns for Vowel Stimuli with Additive Noise.”

The human nervous system is capable of processing information in the form of neural responses, say the SPbPU experts. In fact, the peripheral nervous system involving analyzers (especially auditory and visual) provides insight into the external environment. This system plays a role in the initial conversion of external stimuli into the neural activity stream, which reaches the greatest levels of the central nervous system through the peripheral nerves. This mechanism allows a person to qualitatively recognize a speaker’s voice in a highly noisy environment. However, current speech processing systems are not sufficiently effective and hence need robust computational resources, say the researchers.

In order to overcome this challenge, experts at SPbPU’s Measurement and Information Technologies department performed a study, which is supported by the Russian Foundation for Basic Research. During the course of the research, the team devised techniques for acoustic signal recognition on the basis of peripheral coding. Researchers will partly reproduce the processes carried out by the nervous system while processing the data and incorporate this process into a decision-making module, which establishes the type of the incoming signal.

The main goal is to give the machine human-like hearing, to achieve the corresponding level of machine perception of acoustic signals in the real-life environment.

Anton Yakovenko, Project Lead, Peter the Great St.Petersburg Polytechnic University.

Yakovenko stated that the instances of the responses to vowel phonemes provided by the researchers’ auditory nerve model are represented by the source dataset. A unique algorithm performed data processing. It also performed structural analysis to detect the neural activity patterns used by the model to detect each phoneme. The proposed method integrates graph theory and self-organizing neural networks.

The researchers stated that analysis of the reaction of the auditory nerve fibers made it possible to accurately recognize vowel phonemes under extreme noise exposure and supersede the most standard techniques for parameterization of acoustic signals. According to the SPbPU team, the new techniques would help to produce a new range of neurocomputer interfaces and would also offer better human-machine interaction. In this context, the research holds immense potential for practical applications, for instance, separation of sound sources, in cochlear implantation (surgical restoration of hearing), recognition and computational auditory scene analysis based on the principles of machine hearing, and development of novel bioinspired techniques for speech processing.

The algorithms for processing and analysing big data implemented within the research framework are universal and can be implemented to solve the tasks that are not related to acoustic signal processing.

Anton Yakovenko, Project Lead, Peter the Great St. Petersburg Polytechnic University.

He further added that one of the proposed approaches was effectively utilized for network behavior anomaly detection (NBAD).