Jul 11 2019

Robots are well suited to identical, repetitive movements, such as a basic assembly-line task: picking up a cup, turning it over, and putting it down. However, they cannot readily recognize objects as those objects move through an environment. For example, if a human picks up a cup and sets it down in an unplanned location, the robot should still be able to retrieve it.

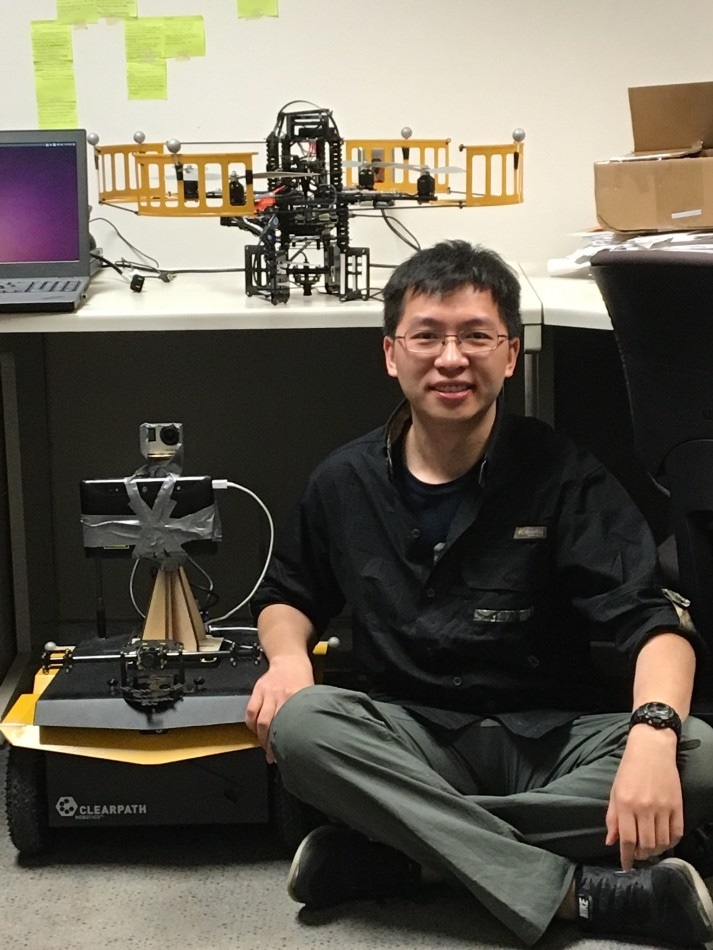

Xinke Deng. (Image credit: University of Illinois)

Scientists at the University of Illinois at Urbana-Champaign, NVIDIA, the University of Washington, and Stanford University have conducted a new study on 6D object pose estimation. They created a filter that gives robots better spatial perception, so they can handle objects and steer them through space more precisely.

While 3D pose offers location data on the X, Y, and Z axes (the position of the object relative to the camera), 6D pose offers a much more comprehensive picture. “Much like describing an airplane in flight, the robot also needs to know the three dimensions of the object’s orientation—its yaw, pitch, and roll,” said Xinke Deng, a doctoral student studying with Timothy Bretl, an associate professor in the Dept. of Aerospace Engineering at the University of Illinois.
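To make the six dimensions concrete, here is a minimal Python sketch of a 6D pose: three translation values plus yaw, pitch, and roll. The class name `Pose6D`, the Z-Y-X rotation order, and the units (meters and radians) are assumptions chosen for illustration; the article does not say which convention the researchers use.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose6D:
    """Illustrative 6D pose: 3D translation plus 3D orientation."""

    # Position of the object relative to the camera (meters, assumed).
    x: float
    y: float
    z: float
    # Orientation in radians, applied in Z-Y-X order (an assumed convention).
    yaw: float
    pitch: float
    roll: float

    def rotation_matrix(self):
        """Return the 3x3 matrix R = Rz(yaw) * Ry(pitch) * Rx(roll)."""
        cy, sy = math.cos(self.yaw), math.sin(self.yaw)
        cp, sp = math.cos(self.pitch), math.sin(self.pitch)
        cr, sr = math.cos(self.roll), math.sin(self.roll)
        return [
            [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
            [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
            [-sp, cp * sr, cp * cr],
        ]

    def transform(self, point):
        """Map a point from the object's frame into the camera's frame."""
        rot = self.rotation_matrix()
        t = (self.x, self.y, self.z)
        return tuple(
            sum(rot[i][j] * point[j] for j in range(3)) + t[i]
            for i in range(3)
        )
```

For example, `Pose6D(1, 2, 3, math.pi / 2, 0, 0).transform((1, 0, 0))` rotates the point a quarter turn about the Z axis and then shifts it, giving approximately `(1, 3, 3)`. A 3D pose alone would carry only the shift.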

Furthermore, in real-world settings, all six of those dimensions are continually changing.

“We want a robot to keep tracking an object as it moves from one location to another,” Deng said.

Deng explained that the research was conducted to enhance computer vision. He and his coworkers created a filter to help robots analyze spatial data. The filter scores many candidate poses, called particles, against the image information gathered by cameras aimed at an object, which helps minimize judgment errors.

In an image-based 6D pose estimation framework, a particle filter uses a lot of samples to estimate the position and orientation. Every particle is like a hypothesis, a guess about the position and orientation that we want to estimate. The particle filter uses observation to compute the value of importance of the information from the other particles. The filter eliminates the incorrect estimations.

Xinke Deng, Doctoral Student, University of Illinois
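The idea Deng describes can be sketched as a generic particle filter. The Python example below tracks a single angle (yaw) rather than a full 6D pose, and it is not the study's implementation. The function names, the noise values, and the Gaussian scoring rule are all assumptions made for illustration.

```python
import math
import random


def angle_diff(a, b):
    """Smallest signed difference between two angles, in radians."""
    return (a - b + math.pi) % (2 * math.pi) - math.pi


def particle_filter_step(particles, observation, rng,
                         motion_noise=0.05, obs_noise=0.2):
    """Run one predict / weight / resample cycle over yaw hypotheses.

    The noise parameters are illustrative assumptions, not values
    taken from the study.
    """
    # Predict: nudge every hypothesis, since the object may have moved.
    predicted = [p + rng.gauss(0.0, motion_noise) for p in particles]

    # Weight: hypotheses that agree with the observation score higher.
    weights = [
        math.exp(-0.5 * (angle_diff(p, observation) / obs_noise) ** 2)
        for p in predicted
    ]
    if sum(weights) == 0.0:
        # Every hypothesis is implausible; fall back to equal weights.
        weights = [1.0] * len(predicted)

    # Resample: poor guesses tend to be dropped, good ones duplicated.
    return rng.choices(predicted, weights=weights, k=len(predicted))


def circular_mean(angles):
    """Average a set of angles without wrap-around artifacts."""
    return math.atan2(sum(math.sin(a) for a in angles),
                      sum(math.cos(a) for a in angles))
```

Starting from hypotheses spread uniformly over the circle and calling `particle_filter_step` repeatedly with observations near the true yaw, the surviving particles cluster around that value, and `circular_mean` gives a single estimate. The spread of the surviving particles is what carries the uncertainty information.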

Deng continued, “Our program can estimate not just a single pose but also the uncertainty distribution of the orientation of an object. Previously, there hasn’t been a system to estimate the full distribution of the orientation of the object. This gives important uncertainty information for robot manipulation.”

The research formulates 6D object pose tracking in the Rao-Blackwellized particle filtering framework, where an object’s 3D rotation and 3D translation are decoupled. This factorization enables the scientists’ approach, referred to as PoseRBPF, to efficiently estimate the 3D translation of an object along with the full distribution over the 3D rotation. Consequently, PoseRBPF can track objects with arbitrary symmetries while still maintaining adequate posterior distributions.
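A toy version of this decoupling can be sketched in Python. In the example below, each particle samples only a one-dimensional translation `x`, while the rotation (a single yaw angle split into 36 bins) is kept as a full discrete distribution and updated exactly for each particle. The one-dimensional setup, the bin count, and the Gaussian `likelihood` function are hypothetical stand-ins, and the sketch should not be read as how PoseRBPF scores observations.

```python
import math
import random

N_BINS = 36  # yaw split into 10-degree bins (an assumed resolution)
BIN_CENTERS = [2 * math.pi * k / N_BINS for k in range(N_BINS)]


def angle_diff(a, b):
    """Smallest signed difference between two angles, in radians."""
    return (a - b + math.pi) % (2 * math.pi) - math.pi


def likelihood(obs_x, obs_yaw, x, yaw, sigma_x=0.1, sigma_yaw=0.3):
    """Hypothetical Gaussian score of an observation given (x, yaw)."""
    ex = (obs_x - x) / sigma_x
    eyaw = angle_diff(obs_yaw, yaw) / sigma_yaw
    return math.exp(-0.5 * (ex * ex + eyaw * eyaw))


def rbpf_step(particles, obs_x, obs_yaw, rng, motion_noise=0.02):
    """One update. Each particle is (x, rot_dist); rot_dist sums to 1."""
    moved, weights = [], []
    for x, rot_prior in particles:
        # Translation is sampled, as in an ordinary particle filter.
        x_new = x + rng.gauss(0.0, motion_noise)

        # Rotation is updated exactly over all bins, given this x_new.
        joint = [
            prior * likelihood(obs_x, obs_yaw, x_new, center)
            for prior, center in zip(rot_prior, BIN_CENTERS)
        ]
        marginal = sum(joint)  # rotation summed out: the particle weight
        if marginal > 0.0:
            rot_post = [j / marginal for j in joint]
        else:
            rot_post = list(rot_prior)

        moved.append((x_new, rot_post))
        weights.append(marginal)

    if sum(weights) == 0.0:
        weights = [1.0] * len(moved)
    # Resample translation hypotheses by how well they explain the data.
    return rng.choices(moved, weights=weights, k=len(moved))
```

Because every particle carries a whole distribution over rotation instead of one guess, an object that looks the same from several angles can keep several bins likely at once, rather than forcing the filter to commit to one of them.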

“Our approach achieves state-of-the-art results on two 6D pose estimation benchmarks,” Deng said.

Deng earned an M.S. in aerospace engineering from the University of Illinois in 2015. He carried out the study as part of an internship with NVIDIA. He is presently a Ph.D. candidate in the Dept. of Electrical and Computer Engineering, where his adviser is Associate Professor Timothy Bretl.

PoseRBPF: A Rao-Blackwellized Particle Filter for 6D Object Pose Tracking

(Video credit: University of Illinois)