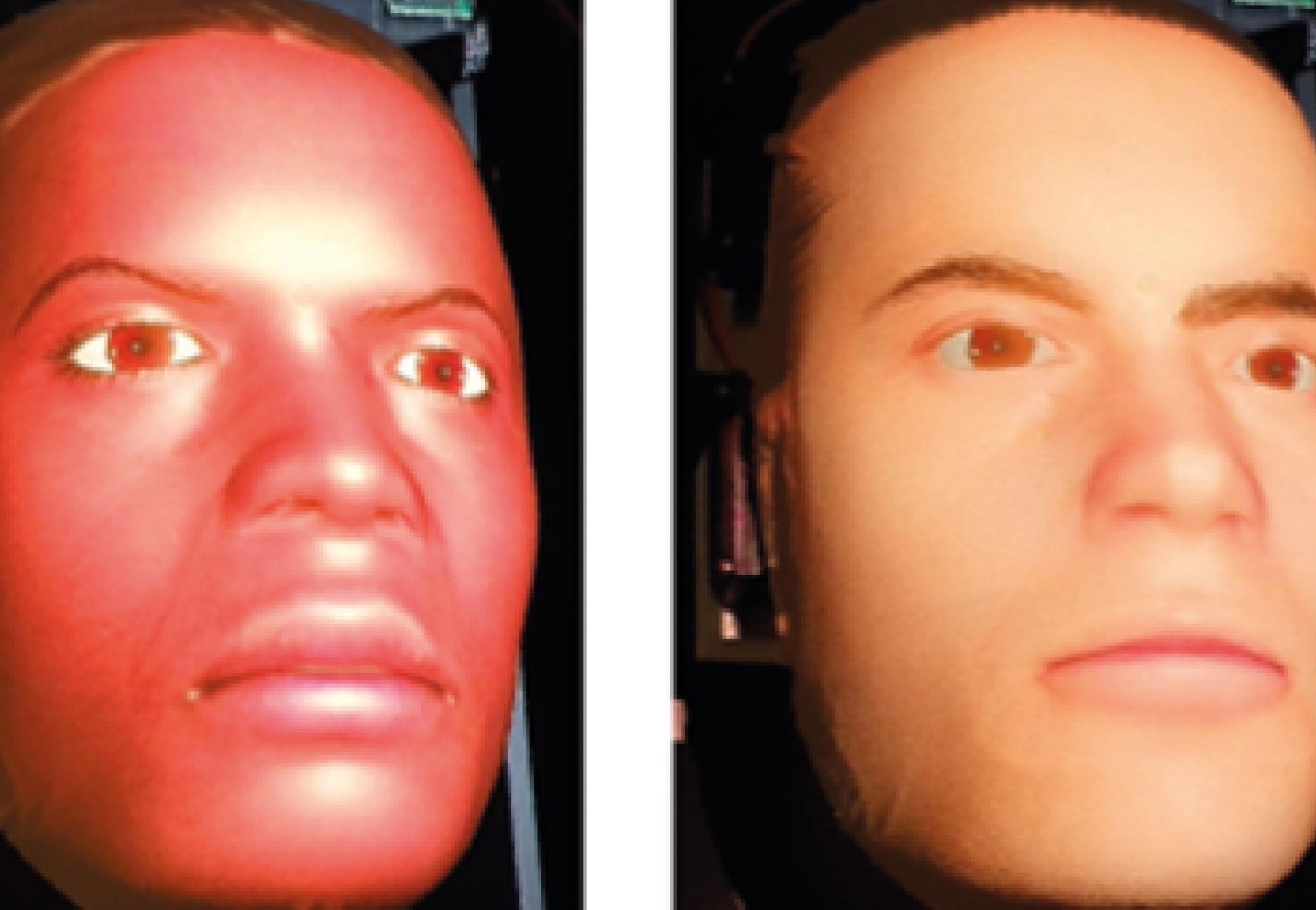

Image Credit: Imperial College London.

By exposing medical students to a larger range of patient identities, the method might also help identify and address early indicators of bias.

Improving the accuracy of facial expressions of pain on these robots is a key step in improving the quality of physical examination training for medical students.

Sibylle Rérolle, Study Author, Dyson School of Design Engineering, Imperial College London

Understanding Facial Expressions: About the Findings

For the study, undergraduate students were asked to perform a physical examination on the abdomen of a robotic patient. Data on the force applied to the abdomen was used to trigger changes in six distinct regions of the robotic face, known as MorphFace, to imitate pain-related facial expressions.

This approach revealed the order in which the distinct regions of the robotic face, termed facial activation units (AUs), should be triggered to produce the most realistic expression of pain. The study also identified the optimal speed and magnitude of AU activation.

The researchers found that the most realistic facial expressions were achieved when the upper face AUs (around the eyes) were triggered first, followed by the lower face AUs (around the mouth). In particular, a longer delay in activating the Jaw Drop AU produced the most natural results.
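The ordering described above can be illustrated with a minimal sketch. It is not the researchers' model: the unit names (other than Jaw Drop), the three-and-three split between upper and lower face, the delays, and the gains are all hypothetical values chosen only to show a force-driven, staggered activation in which upper-face units fire first and Jaw Drop fires last.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActivationUnit:
    name: str
    region: str     # "upper" (around the eyes) or "lower" (around the mouth)
    delay_s: float  # hypothetical onset delay after force is applied, in seconds
    gain: float     # hypothetical activation per newton of applied force


# Six illustrative units; every number here is an assumption, not study data.
UNITS = [
    ActivationUnit("upper_1", "upper", 0.0, 0.05),
    ActivationUnit("upper_2", "upper", 0.1, 0.05),
    ActivationUnit("upper_3", "upper", 0.2, 0.05),
    ActivationUnit("lower_1", "lower", 0.4, 0.04),
    ActivationUnit("lower_2", "lower", 0.5, 0.04),
    ActivationUnit("jaw_drop", "lower", 0.8, 0.03),
]


def activation(unit: ActivationUnit, force_n: float, t_s: float) -> float:
    """Return the unit's activation in [0, 1] at time t_s after contact."""
    if t_s < unit.delay_s:
        return 0.0  # the unit has not been triggered yet
    return min(1.0, max(0.0, unit.gain * force_n))


def face_state(force_n: float, t_s: float) -> dict:
    """Activation of every unit for a given force and elapsed time."""
    return {u.name: activation(u, force_n, t_s) for u in UNITS}


def trigger_order() -> list:
    """Unit names sorted by onset delay, earliest first."""
    return [u.name for u in sorted(UNITS, key=lambda u: u.delay_s)]


if __name__ == "__main__":
    print(trigger_order())
    print(face_state(force_n=10.0, t_s=0.3))
```

In this sketch, changing a unit's `delay_s` changes the trigger order, and changing `gain` changes how strongly the face responds to a given force, which loosely mirrors the order, speed and magnitude parameters the study examined.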

The study also found that participants’ perceptions of the robotic patient’s pain were influenced by gender and ethnic differences between them and the patient, and that these perceptual biases affected the force they applied during the physical examination.

White participants, for example, perceived shorter delays on White robotic faces as the most realistic, whereas Asian participants perceived longer delays as more realistic. This perception bias affected the force that White and Asian participants applied to the White robotic patients during the examination, because participants applied more consistent force when they felt the robot was showing realistic expressions of pain.

The Importance of Diversity in Medical Training Simulators

Feedback from patients’ facial expressions is vital when doctors physically examine painful areas. However, many existing medical training simulators cannot display real-time pain-related facial expressions and offer only a small number of patient identities in terms of race and gender.

According to the researchers, these limitations might lead to discriminatory practices among medical students, with research already revealing racial bias in the ability to recognize facial expressions of pain.

Previous studies attempting to model facial expressions of pain relied on randomly generated facial expressions shown to participants on a screen. This is the first time that participants were asked to perform the physical action which caused the simulated pain, allowing us to create dynamic simulation models.

Jacob Tan, Study Lead Author, Dyson School of Design Engineering, Imperial College London

Participants were asked to evaluate the accuracy of the facial expressions on a scale of “strongly disagree” to “strongly agree,” and the researchers used these ratings to determine the most realistic order of AU activation.
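As a rough illustration of how such ratings could be turned into a ranking, the sketch below maps agreement labels to numbers and picks the configuration with the highest mean score. The five-point scale, the intermediate labels, the configuration names and the simple averaging are assumptions made for this example; the article gives only the two endpoints of the scale and does not describe the analysis used in the study.

```python
from statistics import mean

# Assumed five-point scale; only the two endpoints appear in the article.
LIKERT = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}


def most_realistic(ratings: dict) -> tuple:
    """Return (best_configuration, mean_scores) from label ratings.

    `ratings` maps a hypothetical configuration name to the list of
    agreement labels that participants gave it.
    """
    scores = {
        config: mean([LIKERT[label] for label in labels])
        for config, labels in ratings.items()
    }
    best = max(scores, key=scores.get)
    return best, scores


if __name__ == "__main__":
    # Made-up responses for two hypothetical activation orders.
    example = {
        "upper_then_lower": ["agree", "strongly agree", "agree"],
        "lower_then_upper": ["disagree", "neutral", "disagree"],
    }
    print(most_realistic(example))
```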

The study included sixteen participants, a mix of men and women of Asian and White ethnicity. Each participant completed 50 examination trials on four different robotic patient identities: Black female, Black male, White female and White male.

Underlying biases could lead doctors to misinterpret the discomfort of patients - increasing the risk of mistreatment, negatively impacting doctor-patient trust, and even causing mortality. In the future, a robot-assisted approach could be used to train medical students to normalize their perceptions of pain expressed by patients of different ethnicity and gender.

Thilina Lalitharatne, Study Co-Author, Dyson School of Design Engineering, Imperial College London

Next Steps

Dr. Thrishantha Nanayakkara, the director of Morph Lab, cautioned against extrapolating these findings to participant-patient interactions that were not included in the study.

Nanayakkara added, “Further studies including a broader range of participant and patient identities, such as Black participants, would help to establish whether these underlying biases are seen across a greater range of doctor-patient interactions.”

“Current research in our lab is looking to determine the viability of these new robotic-based teaching techniques and, in the future, we hope to be able to significantly reduce underlying biases in medical students in under an hour of training,” Nanayakkara concluded.

Journal Reference:

Tan, Y., et al. (2022) Simulating dynamic facial expressions of pain from visuo‑haptic interactions with a robotic patient. Scientific Reports. doi.org/10.1038/s41598-022-08115-1.