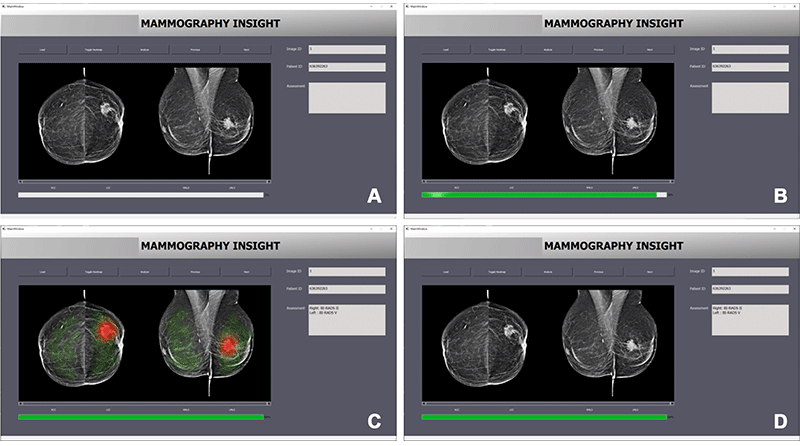

Interface of the artificial intelligence–based diagnostic system. Images show interface appearance when (A) loading a new mammogram, (B) performing the image evaluation, (C) displaying the results of the image evaluation via a heatmap, and (D) displaying the results of the image evaluation with the heatmap turned off. Image Credit: https://doi.org/10.1148/radiol.222176 © RSNA 2023

Frequently touted as a “second set of eyes” for radiologists, AI-based mammographic support systems are among the most promising applications of AI in radiology. As the technology expands, there are concerns that it could make radiologists vulnerable to automation bias, the tendency of humans to favor recommendations from automated decision-making systems.

Numerous studies have shown that introducing computer-aided detection into the mammography workflow can affect radiologists' performance. However, no studies so far have examined how AI-based systems influence the accuracy of mammogram readings by radiologists.

Scientists from institutions in Germany and the Netherlands set out to identify how automation bias could affect radiologists at different levels of expertise when reading mammograms assisted by an AI system.

In the experiment, 27 radiologists read 50 mammograms and provided their Breast Imaging Reporting and Data System (BI-RADS) assessment for each, aided by an AI system.

BI-RADS is a standard system used by radiologists to describe and classify breast imaging findings. While a BI-RADS category is not a diagnosis, it is vital in helping doctors determine the next steps in care.

The researchers presented the mammograms in two randomized sets. The first was a training set of 10 mammograms in which the AI suggested the correct BI-RADS category. The second set consisted of 40 mammograms, 12 of which carried incorrect BI-RADS categories purportedly suggested by the AI.

The results showed that the radiologists were considerably worse at assigning the correct BI-RADS score in cases where the purported AI suggested an incorrect BI-RADS category. For instance, inexperienced radiologists assigned the correct BI-RADS score in nearly 80% of cases in which the AI suggested the correct BI-RADS category.

When the purported AI suggested the wrong category, their accuracy fell to below 20%. Experienced radiologists, those with more than 15 years of experience on average, saw their accuracy fall from 82% to 45.5% when the purported AI suggested the incorrect category.
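To put the reported figures in perspective, the short sketch below works out the size of the drop for experienced radiologists, both in percentage points and relative to their starting accuracy. The function name `accuracy_drop` is made up for illustration; only the two input percentages come from the article.

```python
def accuracy_drop(correct_ai_pct, incorrect_ai_pct):
    """Return (absolute drop in percentage points, relative drop in percent)."""
    absolute = correct_ai_pct - incorrect_ai_pct
    relative = 100.0 * absolute / correct_ai_pct
    return absolute, relative

# Accuracy of experienced radiologists as reported in the article:
# 82% when the AI suggestion was correct, 45.5% when it was incorrect.
absolute, relative = accuracy_drop(82.0, 45.5)
print(f"{absolute:.1f} percentage points, {relative:.1f}% relative drop")
```

The same calculation is not shown for inexperienced radiologists because the article gives only approximate values ("nearly 80%" and "below 20%") for that group.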

Findings Emphasize Need for Appropriate Safeguards

We anticipated that inaccurate AI predictions would influence the decisions made by radiologists in our study, particularly those with less experience.

Thomas Dratsch, MD, Ph.D., Study Lead Author, Institute of Diagnostic and Interventional Radiology, University Hospital Cologne

Dratsch added, “Nonetheless, it was surprising to find that even highly experienced radiologists were adversely impacted by the AI system’s judgments, albeit to a lesser extent than their less seasoned counterparts.”

The scientists stated that the results show why the effects of human-machine interaction must be carefully considered to ensure safe deployment and accurate diagnostic performance when human readers and AI are combined.

Given the repetitive and highly standardized nature of mammography screening, automation bias may become a concern when an AI system is integrated into the workflow. Our findings emphasize the need for implementing appropriate safeguards when incorporating AI into the radiological process to mitigate the negative consequences of automation bias.

Thomas Dratsch, MD, Ph.D., Study Lead Author, Institute of Diagnostic and Interventional Radiology, University Hospital Cologne

Possible safeguards include presenting users with the confidence levels of the decision support system. In the case of an AI-based system, this could be done by displaying the probability of each output.
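As a rough illustration of what displaying the probability of each output could look like, the sketch below converts raw model scores into probabilities with a softmax and lists every category alongside its probability instead of showing a single suggestion. This is not the system used in the study: the category labels, the raw scores, and the function names are all hypothetical, and softmax is only one common way to obtain such probabilities.

```python
import math

def softmax(scores):
    """Convert raw model scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def format_suggestion(labels, scores):
    """Return one display line per category, most probable first."""
    probs = softmax(scores)
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return [f"{label}: {prob:.0%}" for label, prob in ranked]

# Hypothetical category labels and raw scores, for illustration only.
labels = ["BI-RADS 2", "BI-RADS 3", "BI-RADS 4", "BI-RADS 5"]
scores = [0.2, 1.1, 1.4, -0.5]

lines = format_suggestion(labels, scores)
for line in lines:
    print(line)
```

Showing the full ranked list makes a narrow margin between the top two categories visible to the reader, which a single suggested category would hide.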

Another strategy involves teaching users about the reasoning process of the system. Ensuring that the users of a decision support system feel accountable for their own decisions could also help reduce automation bias, Dr. Dratsch said.

The scientists plan to use tools such as eye-tracking technology to better understand the decision-making process of radiologists who work with AI.

Moreover, we would like to explore the most effective methods of presenting AI output to radiologists in a way that encourages critical engagement while avoiding the pitfalls of automation bias.

Thomas Dratsch, MD, Ph.D., Study Lead Author, Institute of Diagnostic and Interventional Radiology, University Hospital Cologne

Journal Reference

Dratsch, T., et al. (2023) Automation Bias in Mammography: The Impact of Artificial Intelligence BI-RADS Suggestions on Reader Performance. Radiology. doi.org/10.1148/radiol.222176.