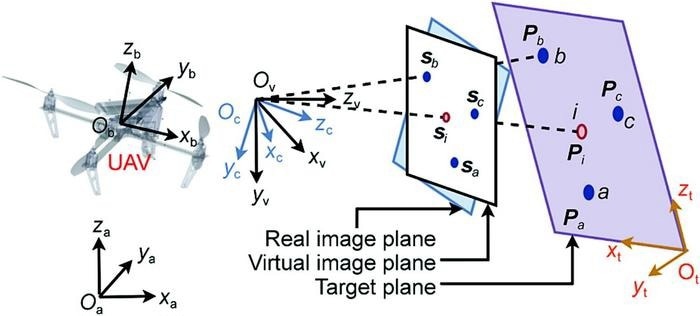

Reference coordinate frames of the UAV system. Image Credit: Yanjie Chen, Yangning Wu, Limin Lan, Hang Zhong, Zhiqiang Miao, Hui Zhang, Yaonan Wang

The research article outlines a comprehensive method that addresses three challenges: estimating target velocity, estimating image depth, and maintaining tracking stability in the presence of external disturbances.

The proposed method uses a constructed virtual camera to derive simplified and decoupled image dynamics for underactuated UAVs.
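The article does not spell out how the virtual camera is built. A common construction in the visual-servoing literature, assumed here, keeps the real camera's position and yaw but removes its roll and pitch, so image features no longer depend on the tilt an underactuated UAV needs in order to accelerate. The minimal sketch below re-projects a normalized image point into such a hypothetical virtual frame. It assumes a downward-looking camera whose axes coincide with a north-east-down (NED) body frame and Z-Y-X Euler angles; all function names are illustrative.

```python
import math


def rot_x(a):
    """Rotation matrix about the x axis (roll)."""
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(a):
    """Rotation matrix about the y axis (pitch)."""
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def matvec(A, v):
    return [sum(A[i][k] * v[k] for k in range(3)) for i in range(3)]


def to_virtual_image(x, y, roll, pitch):
    """Map a normalized point (x, y) seen by the tilted real camera into a
    hypothetical virtual camera with the same yaw but zero roll and pitch.

    Assumes camera axes == NED body axes, so the body-to-virtual rotation
    is Ry(pitch) @ Rx(roll) (the yaw factor cancels out).
    """
    R = matmul(rot_y(pitch), rot_x(roll))
    X, Y, Z = matvec(R, [x, y, 1.0])  # bearing vector expressed in the virtual frame
    if Z <= 0:
        raise ValueError("point is behind the virtual image plane")
    return X / Z, Y / Z  # re-normalize onto the virtual image plane
```

If the UAV tilts while hovering directly above a point, the real camera sees that point off-center, but its virtual-image coordinates stay at the origin. Removing this tilt dependence is what makes the resulting image dynamics simpler and decoupled.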

By accounting for the uncertainties caused by the unpredictable rotations and velocities of dynamic targets, the researchers developed a new image depth model that extends the image-based visual servoing (IBVS) method to track rotating targets with arbitrary orientations. This model helps ensure accurate image feature tracking and smooth tracking of the rotating target.
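The article does not give the paper's depth model. To show why depth is a problem in the first place, the sketch below uses the classical interaction matrix for a single normalized point feature from the general IBVS literature; it is not the model proposed in the paper. It maps the camera twist `(vx, vy, vz, wx, wy, wz)` to the image-point velocity, and the function names are illustrative.

```python
def point_interaction_matrix(x, y, Z):
    """Classical 2x6 interaction matrix for a normalized image point (x, y)
    at depth Z > 0. Rows give (xdot, ydot); columns follow the camera
    twist order (vx, vy, vz, wx, wy, wz)."""
    if Z <= 0:
        raise ValueError("depth must be positive")
    return [
        [-1.0 / Z, 0.0, x / Z, x * y, -(1.0 + x * x), y],
        [0.0, -1.0 / Z, y / Z, 1.0 + y * y, -x * y, -x],
    ]


def feature_velocity(x, y, Z, twist):
    """Image-point velocity produced by a given camera twist."""
    L = point_interaction_matrix(x, y, Z)
    return [sum(L[i][j] * twist[j] for j in range(6)) for i in range(2)]
```

Only the translational columns carry the factor `1/Z`: doubling the depth halves how fast a translation moves the feature, while the rotational columns do not depend on depth at all. An unknown or wrongly estimated depth therefore distorts the translational part of the image dynamics, which is the dependence a depth model has to handle.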

A velocity observer has also been developed to estimate the relative velocity between the UAV and the dynamic target. This observer removes the need for translational velocity measurements and reduces the control chatter caused by noisy measurements.
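The paper's observer equations are not given in the article. As a generic illustration of the idea, a linear Luenberger-style observer can recover a relative velocity from relative-position measurements alone. This minimal sketch assumes a 1-D double-integrator model, a measured relative position, and a known commanded acceleration `u`; the gains `l1 = 8` and `l2 = 16` are hypothetical values that place both error poles at -4.

```python
def simulate_velocity_observer(v0, u=0.0, l1=8.0, l2=16.0, dt=0.001, t_end=5.0):
    """Estimate relative velocity from relative-position samples (1-D sketch).

    True model:  p' = v,            v' = u
    Observer:    p_hat' = v_hat + l1*e,   v_hat' = u + l2*e,   e = p - p_hat
    Returns (v_hat, v) at t_end.
    """
    p, v = 0.0, v0            # true relative position and velocity
    p_hat, v_hat = 0.0, 0.0   # observer starts with no velocity knowledge
    for _ in range(round(t_end / dt)):
        # propagate the true relative motion (forward Euler)
        p += v * dt
        v += u * dt
        # the correction uses only the position measurement p
        e = p - p_hat
        p_hat += (v_hat + l1 * e) * dt
        v_hat += (u + l2 * e) * dt
    return v_hat, v
```

For example, `simulate_velocity_observer(1.5)` returns an estimate that has converged to the true velocity of 1.5 even though velocity was never measured. Because the estimate is built by integrating a position error rather than by differentiating noisy signals, this kind of observer generally attenuates high-frequency measurement noise.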

Additionally, an integral-based filter has been introduced to compensate for unpredictable environmental disturbances, thereby enhancing the anti-disturbance ability of the UAV.
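The article does not detail the filter itself. As a stand-in, the minimal sketch below uses a classical first-order disturbance observer, which also relies on an integral state. It estimates an unknown constant disturbance `d` acting on a 1-D velocity model and feeds the estimate back to cancel it. The plant model, gains, and function name are assumptions for illustration only.

```python
def simulate_disturbance_rejection(d=0.8, k=5.0, kp=2.0, v0=1.0,
                                   dt=0.001, t_end=6.0, compensate=True):
    """1-D sketch: plant v' = u + d with an unknown constant disturbance d.

    Estimate d_hat = z + k*v with integral state z' = -k*(u + d_hat),
    which yields d_hat' = k*(d - d_hat), so d_hat converges to d.
    Control: u = -kp*v - d_hat (compensated) or u = -kp*v (uncompensated).
    Returns (v, d_hat) at t_end.
    """
    v = v0
    z = -k * v            # initialized so that d_hat starts at 0
    d_hat = z + k * v
    for _ in range(round(t_end / dt)):
        u = -kp * v - (d_hat if compensate else 0.0)
        v += (u + d) * dt            # true plant, forward Euler
        z += -k * (u + d_hat) * dt   # integrate the estimator state
        d_hat = z + k * v            # updated disturbance estimate
    return v, d_hat
```

With compensation enabled, the velocity is driven to zero despite the disturbance. Without it, the same proportional controller settles at the steady-state offset `d / kp = 0.4`, while the estimate still converges to `d`.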

The stability of the velocity observer and the IBVS controller has been thoroughly analyzed using Lyapunov's method. Comparative simulations and multistage experiments have also been carried out to demonstrate the tracking stability, anti-disturbance ability, and robustness of the proposed method when tracking a dynamic rotating target.
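The article does not reproduce the proofs. As a generic illustration of how a Lyapunov argument certifies an estimator, consider a scalar estimation error $\tilde{e}$ with assumed dynamics $\dot{\tilde{e}} = -k\tilde{e}$, $k > 0$, such as the error of a first-order estimator tracking a constant quantity:

$$
V(\tilde{e}) = \tfrac{1}{2}\tilde{e}^{2}, \qquad
\dot{V} = \tilde{e}\,\dot{\tilde{e}} = -k\,\tilde{e}^{2} = -2kV \le 0
\;\Rightarrow\; V(t) = V(0)\,e^{-2kt}, \qquad |\tilde{e}(t)| = |\tilde{e}(0)|\,e^{-kt}.
$$

The coupled observer and controller analysis in the paper is necessarily more involved; this only shows the basic pattern of choosing a positive-definite $V$ and proving that $\dot{V}$ is negative.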

Key contributions of this study include:

- Novel image depth model: The researchers proposed a dedicated image depth model that accurately eliminates the image depth without requiring rotation information of the tracked target. This model allows the proposed IBVS controller to track dynamic rotating targets with arbitrary orientations.

- Velocity observer: A dedicated velocity observer has been developed to estimate the relative velocity between the dynamic target and the UAV. This enables the proposed technique to be used in GPS-denied environments while reducing the control chatter caused by noisy velocity measurements.

- Integral-based filter: An integral-based filter has been designed to estimate and compensate for unpredictable disturbances, such as the accelerations of environmental disturbances and dynamic targets. This improves the UAV’s ability to handle unknown motions of external disturbances and dynamic targets.

In summary, this study presents a dynamic IBVS technique that considerably enhances UAV tracking performance under unpredictable disturbances. By combining a novel image depth model, a velocity observer, and an integral-based filter, the proposed method demonstrates improved anti-disturbance ability, tracking stability, and robustness.

The method’s stability has been analyzed using Lyapunov theory, and simulations and experiments have been performed to confirm its effectiveness.

Journal Reference:

Chen, Y., et al. (2023) Dynamic Target Tracking of Unmanned Aerial Vehicles Under Unpredictable Disturbances. Engineering. doi.org/10.1016/j.eng.2023.05.017.