This approach achieved improved mapping accuracy and greater scalability, particularly for long image sequences, without requiring pre-calibrated cameras or complex tuning, making it highly scalable for real-world applications like search-and-rescue and industrial automation.

Solving AI’s Mapping Limitations

The ability for a robot to simultaneously map its environment and locate itself within it, a challenge known as SLAM, is a foundation of autonomous navigation. This capability is critically important in time-sensitive scenarios, such as a search-and-rescue robot navigating a collapsed mine, where it must process thousands of images to quickly generate a comprehensive 3D map of treacherous terrain.

While machine-learning models have made SLAM more accessible by learning reconstruction directly from data, even the most advanced system can only handle a few dozen images at once. This limitation makes them impractical for use in large, diverse environments. The real challenge is scaling these AI-driven reconstructions without losing accuracy or speed. This problem is rooted in the distortions and ambiguities that neural networks introduce when processing large amounts of visual data from uncalibrated cameras.

The Submap Solution and the Unforeseen Challenge of Ambiguity

To overcome the scaling problem, the MIT team, led by graduate student Dominic Maggio, devised an intuitive approach. Instead of forcing a single AI model to process an entire scene at once, their VGGT-SLAM system breaks the environment into smaller, more manageable submaps.

The model generates these dense 3D reconstructions incrementally, and the initial plan was to merge them using classical methods of rotation and translation. However, this seemingly straightforward solution failed in practice. The reason, as Maggio discovered through an analysis of classical computer vision literature, was an issue known as reconstruction ambiguity.

When AI models process images from an uncalibrated camera, the resulting 3D submaps are not perfect representations of reality; instead, they are often deformed, with walls that may be slightly bent or dimensions that are subtly stretched. These deformations are not random but are consistent within each submap, and stem from the model’s uncertainty about the camera's parameters and the scene's geometry.

Consequently, a simple rigid alignment is inadequate because it tries to fit together pieces that are fundamentally warped in different ways. This revelation highlighted that the problem was not just one of scale, but one of geometric consistency, requiring a much more flexible mathematical framework to achieve a coherent global map.

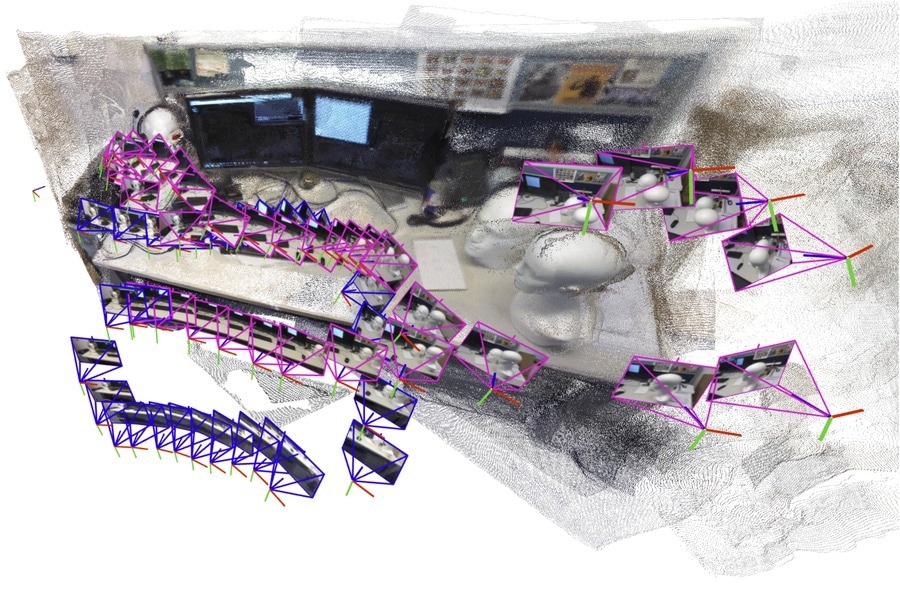

The AI-driven system combines smaller submaps of a scene to generate a full 3D map, like this office cubicle

Bridging AI with Classical Geometry

The breakthrough came from combining the modern, data-driven world of AI with the foundational principles of classical geometry. The researchers realized that to align these deformed submaps accurately, they needed a mathematical technique capable of representing a much wider range of transformations.

While previous methods used a similarity transform (accounting for rotation, translation, and uniform scale), the VGGT-SLAM system introduces a far more powerful approach. It optimizes transformations over the SL(4) manifold, effectively estimating a 15-degree-of-freedom homography between sequential submaps. This sophisticated mathematical tool can account for the complex projective deformations (bends and stretches) that the AI model introduces, consistently warping each submap so that they can be seamlessly stitched together.

This process also incorporates loop closure constraints, ensuring that when a robot revisits a location, the map aligns perfectly, correcting for any accumulated drift. The result is a system that preserves the simplicity of a learning-based model and works immediately with any uncalibrated monocular camera, including a standard smartphone. However, the authors note that certain scenes, especially planar or low-parallax environments, can still present challenges.

It generates dense 3D reconstructions with competitive accuracy compared to state-of-the-art systems, and it demonstrates strong performance on established benchmarks.

Expanding Real-World Mapping

In conclusion, the development of VGGT-SLAM represents a significant leap forward in robotic perception, demonstrating that the key to scalability often lies in a hybrid intelligence. By fusing the representational power of modern neural networks with the deep geometric understanding of classical computer vision, the researchers have created a system that is simultaneously simple, fast, and highly accurate.

This work proves that for robots to perform increasingly complex tasks in unpredictable real-world environments, they require not just more data, but a more profound and consistent model of geometric space.

The success of this approach also highlights current limitations, such as difficulties with planar scenes and sensitivity to outlier depth estimates, highlighting the need for more reliable search-and-rescue robots, efficient industrial automation, and immersive extended reality applications, all built on a mapping foundation that is both powerful and practical to deploy.

Download your PDF copy now!

Journal Reference

Zewe, A. (2025, November). Teaching robots to map large environments. MIT News | Massachusetts Institute of Technology. https://news.mit.edu/2025/teaching-robots-to-map-large-environments-1105

Maggio, D., Lim, H., & Carlone, L. (2025). VGGT-SLAM: Dense RGB SLAM Optimized on the SL(4) Manifold. ArXiv.org. DOI:10.48550/arXiv.2505.12549. https://arxiv.org/abs/2505.12549

Disclaimer: The views expressed here are those of the author expressed in their private capacity and do not necessarily represent the views of AZoM.com Limited T/A AZoNetwork the owner and operator of this website. This disclaimer forms part of the Terms and conditions of use of this website.