Pulkit Agrawal is the Steven and Renee Finn Chair Professor in the Department of Electrical Engineering and Computer Science at MIT and heads the Improbable AI Lab. He was the lead PI for this work. Gabriel Margolis is a first-year Ph.D. candidate at MIT in the Improbable AI Lab. He was the lead author of the paper about this work, which he will present at the Conference on Robot Learning in November.

Can you give our readers an overview of your recent research?

If a human were crossing a large gap, they would first run to build up speed, plant their feet to apply explosive force for a big jump, and continue running on the other side to regain stability. However, this type of adaptive behavior is typically difficult for robots. We have designed a system that can adjust its gait like the human in that example to execute a sequence of jumps across gaps. The type of jump necessary depends on the size and location of the gap, which is why vision is also necessary – to perceive the gap.

What is meant by the term Depth-based Impulse Control (DIC)?

Depth-based Impulse Control (DIC) is a framework for designing controllers that reason, directly from depth data, about the forces a robot should apply. Depth-based means that the system directly interprets depth images, with no intermediate reconstruction of the environment. Impulse Control means that the system explicitly plans impulses – the forces applied to the ground – and prioritizes tracking these over tracking a particular position. Reasoning in terms of forces, instead of just positions, is important for robots to carry out dynamic tasks such as jumping or pushing.

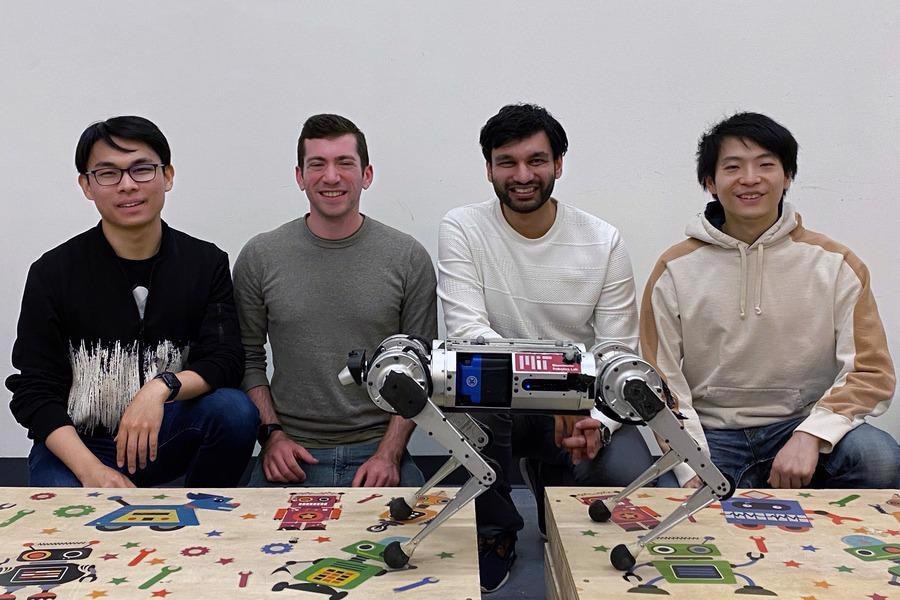

The MIT team, from left to right: Ph.D. students Tao Chen and Gabriel Margolis; Pulkit Agrawal, the Steven G. and Renee Finn Career Development Assistant Professor in the Department of Electrical Engineering and Computer Science; and Ph.D. student Xiang Fu. Image Credit: MIT/Electrical Engineering & Computer Science (EECS).

How does the control system work to ensure speed and agility? What are the benefits of incorporating two separate controllers into the system?

The high-level controller processes visual inputs to produce a trajectory of the robot's body and a 'blind' low-level controller ensures that the predicted trajectory is tracked. This separation eases the task for both the controllers: the high-level is shielded from intricacies of joint-level actuation and the low-level is not required to reason about visual observations, allowing us to leverage advances in blind locomotion.

We choose a low-level controller that reasons in terms of impulses that the robot applies to the ground, which provides a rich control representation for different fast and agile behaviors depending on the perception of the terrain.
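
As a rough illustration of this split, and not the team's actual code, the sketch below keeps vision in one function and tracking in another. Every name, threshold, and the 9 kg mass are assumptions made for the example; the real high-level controller is a learned policy, whereas this stand-in is a hand-written rule. The low-level function uses only the standard relation that impulse equals mass times change in velocity.

```python
from dataclasses import dataclass
from typing import Sequence

ROBOT_MASS_KG = 9.0  # assumed value, for illustration only


@dataclass
class BodyTarget:
    """Hypothetical high-level output: the body velocity to reach next."""
    forward_velocity_mps: float


def high_level_controller(depth_row_m: Sequence[float],
                          cruise_mps: float = 1.0,
                          jump_mps: float = 2.0,
                          near_m: float = 0.5) -> BodyTarget:
    """Toy stand-in for the learned vision policy.

    Sees only depth readings and asks for more speed when something is close.
    """
    nearest = min(depth_row_m)
    speed = jump_mps if nearest < near_m else cruise_mps
    return BodyTarget(forward_velocity_mps=speed)


def low_level_controller(target: BodyTarget,
                         current_velocity_mps: float,
                         mass_kg: float = ROBOT_MASS_KG) -> float:
    """'Blind' tracker: never sees depth, only the target and body state.

    Returns the horizontal impulse (N*s) that would bring the body to the
    target velocity, using impulse = mass * change in velocity.
    """
    return mass_kg * (target.forward_velocity_mps - current_velocity_mps)


# The two controllers communicate only through BodyTarget.
target = high_level_controller([1.8, 0.4, 2.1])
impulse = low_level_controller(target, current_velocity_mps=1.0)
print(target.forward_velocity_mps, impulse)
```

Because the only thing passed between the two functions is the target, either side could be swapped out, for example replacing the rule with a trained network, without touching the other.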

One giant leap for the mini cheetah

Can you explain the processes involved when testing the system?

We tested the system both in simulation and in the real world. We evaluated the simulated robot over hundreds of different terrains with various gap sizes and spacing, providing it with simulated depth images from a renderer. After validating our method in simulation, we used the MIT Mini Cheetah robot to conduct experiments in the real world. We constructed gap environments with the help of wooden platforms that were placed at random distances from each other.

The team's work will be presented next month at the Conference on Robot Learning. Are there any features in particular that you will be highlighting?

At CoRL, we look forward to highlighting the speed and agility with which our robot can traverse discontinuous terrains by using learning to bypass traditional perception and planning pipelines. We hope that our work provokes conversation about the appropriate roles of model-based and model-free control and how vision can be used in locomotion to exploit the hardware limits of speed, energy, and robustness on varied terrains.

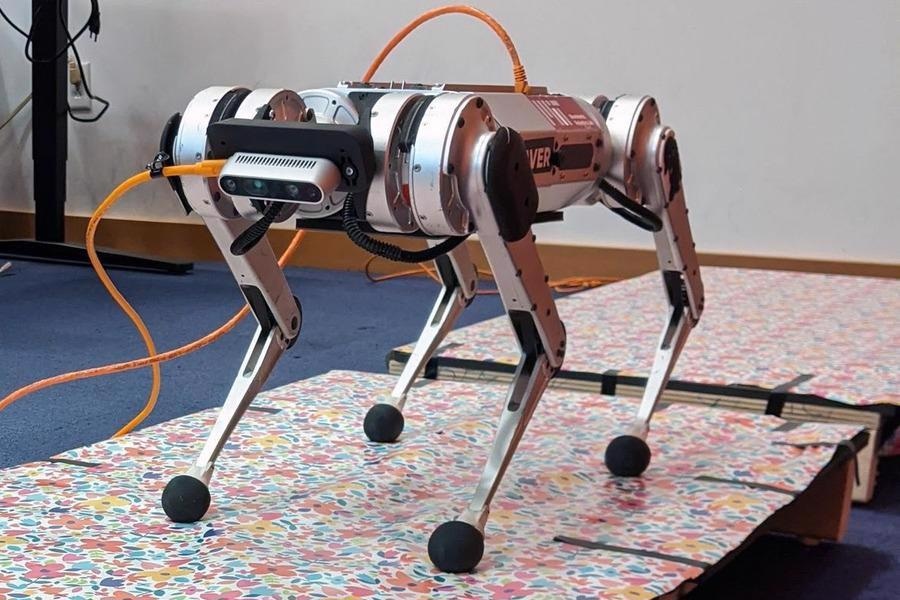

Researchers from MIT have developed a system that improves the speed and agility of legged robots as they jump across gaps in terrain. Image Credit: MIT/Electrical Engineering & Computer Science (EECS)

Can you give our readers an insight into the reinforcement learning method that was used?

The learned component of our system was trained using Proximal Policy Optimization (PPO), a common on-policy reinforcement learning algorithm. We rewarded the robot for making forward progress and penalized it for moving dangerously quickly. The algorithm took about 6000 training episodes, or 60 hours of simulated locomotion, to converge to its maximum reward.
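
A reward of this shape might look like the sketch below. The interview does not give the exact terms, so the speed limit, the weights, and the function name are all hypothetical; only the two ingredients (reward forward progress, penalize excessive speed) and the training figures come from the answer above.

```python
def step_reward(forward_progress_m: float,
                speed_mps: float,
                speed_limit_mps: float = 3.0,       # hypothetical threshold
                progress_weight: float = 1.0,       # hypothetical weight
                speed_penalty_weight: float = 0.5   # hypothetical weight
                ) -> float:
    """Reward forward progress; penalize only the speed above the limit."""
    excess_speed = max(0.0, speed_mps - speed_limit_mps)
    return (progress_weight * forward_progress_m
            - speed_penalty_weight * excess_speed)


# Figures quoted in the interview: about 6000 episodes, 60 simulated hours.
TRAINING_EPISODES = 6000
SIMULATED_HOURS = 60
seconds_per_episode = SIMULATED_HOURS * 3600 / TRAINING_EPISODES

print(step_reward(0.1, speed_mps=2.0))  # under the limit: progress only
print(step_reward(0.1, speed_mps=4.0))  # over the limit: penalty applies
print(seconds_per_episode)              # average episode length in seconds
```

Taken at face value, the quoted figures imply an average of 36 simulated seconds per training episode.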

We initially tried training the policy directly from depth images. In the end, we found that it was more efficient to perform reinforcement learning using terrain elevation maps, rather than depth images, and to apply a technique called "asymmetric-information behavioral cloning" to convert the learned policy to use depth images.

In asymmetric-information behavioral cloning, a 'teacher' policy learns from privileged information (e.g., terrain elevation maps), but this privileged information is not accessible to the robot using its sensors in the real world. Then, a 'student' policy is trained to imitate the teacher's actions without any privileged information. In this way, the student policy can be deployed in the real world.
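
The teacher-student idea can be shown with a deliberately tiny example. This is a sketch under heavy assumptions, not the method from the paper: the teacher here is a fixed hand-written rule rather than a policy trained with reinforcement learning, the "privileged information" is a single true gap distance rather than an elevation map, and the student's "sensor" is that distance plus noise rather than a depth image. All names and constants are hypothetical.

```python
import random


def teacher_policy(true_gap_distance_m: float) -> float:
    """Teacher acts on privileged ground truth (toy rule, not a learned policy)."""
    return 2.0 - 0.5 * true_gap_distance_m  # commanded speed in m/s


def sensor_reading(true_gap_distance_m: float, rng: random.Random) -> float:
    """What the student is allowed to see: a noisy measurement of the scene."""
    return true_gap_distance_m + rng.gauss(0.0, 0.01)


def train_student(num_samples: int = 5000, learning_rate: float = 0.05,
                  seed: int = 0):
    """Fit a linear student, action = w * reading + b, to imitate the teacher.

    Uses plain stochastic gradient descent on the squared imitation error.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    for _ in range(num_samples):
        gap = rng.uniform(0.0, 2.0)            # simulator knows the truth
        reading = sensor_reading(gap, rng)     # student input
        target_action = teacher_policy(gap)    # teacher label from truth
        error = (w * reading + b) - target_action
        w -= learning_rate * error * reading
        b -= learning_rate * error
    return w, b


w, b = train_student()
print(w, b)  # should land close to the teacher's coefficients

# At deployment the student needs only its own sensor reading.
student_action = w * 1.0 + b
```

The asymmetry is in the training loop: the label comes from information only the simulator has, while the student's input is restricted to what a real sensor could provide.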

What additional features need to be developed before the system can be used outside of the lab?

(1) Improving the accuracy of the onboard state estimation system; our current state estimation is noisy during highly agile motions. (2) More compute; the robot only has a small onboard computer for now and we handle some computation offboard. (3) Better low-level controllers that are aware of the kinematic structure of the robot.

What are your predictions for the future of mobile robotics?

Mobile robots are already deployed in industrial setups (e.g., Kiva) and in our homes (e.g., Roomba). However, they are mostly restricted to environments where the ground is flat. Imagine operating a mobile robot in the wild, where it needs to walk over diverse terrains and jump over barriers such as rivers and gaps. Our work is enabling that. The other aspect to consider is mobile manipulation, which has remained a complex problem, but the field is inching closer towards it. Learning-based methods can move the computational cost of planning offline, and that cost grows exponentially on a mobile manipulator.

What is next for the team at MIT?

As an immediate next step, we plan to make the necessary improvements to take our system into the wild. We also plan to investigate expanded task specifications for locomotion; until now, it was hard just for robots to remain upright, so people didn't think much about objectives like energy efficiency, speed, and robustness. Now that locomotion systems are becoming better and better at moving in diverse ways, we can consider how to select locomotion behaviors that balance tradeoffs between these objectives while navigating complex scenes.

Where can readers find more information?

Project website: https://sites.google.com/view/jumpingfrompixels

Video: https://www.youtube.com/watch?v=UqwldNLHE9w

Paper: https://openreview.net/pdf?id=R4E8wTUtxdl

About Pulkit Agrawal and Gabriel Margolis

Dr. Pulkit Agrawal is the Steven and Renee Finn Chair Professor in the Department of Electrical Engineering and Computer Science at MIT. He earned his Ph.D. from UC Berkeley and co-founded SafelyYou Inc.

His research interests span robotics, deep learning, computer vision and reinforcement learning. Pulkit completed his bachelor's from IIT Kanpur and was awarded the Director's Gold Medal. He is a recipient of the Sony Faculty Research Award, Salesforce Research Award, Amazon Machine Learning Research Award, Signatures Fellow Award, Fulbright Science and Technology Award, Goldman Sachs Global Leadership Award, OPJEMS, and Sridhar Memorial Prize, among others.

Gabriel Margolis is a Ph.D. candidate at the Massachusetts Institute of Technology, working on Embodied Intelligence. His research is concerned with making effective use of vision in contact-rich robotic control tasks. Margolis is advised at MIT CSAIL by Prof. Pulkit Agrawal. He received his MEng and BS in EECS and Aerospace Engineering at MIT.
