Easily accessible information technology and flexible work methods allow goods and services to be personalized for individual customers in large quantities, yet mass customization can still lead to unnecessary complexity and cost. As customization becomes more common, the concept of markets must also be restructured: customers can no longer be considered members of a single market segment.

Rather than concentrating on homogeneous markets and average offerings, mass customizers identify the dimensions along which their customers' requirements differ. These shared points of distinctiveness indicate where customers differ from one another. It is at these points that traditional offerings, designed for average needs, leave customers unsatisfied: a gap opens between what a company offers and what each customer truly wants.

The method proposed in this paper, termed Interactive Refinement Programming (IRP), enables non-expert users to create a new production process by incrementally refining built-in standard and parametric tasks.

The study, published in the MDPI journal Robotics, gives an overview of the proposed method, the hardware used, and the software designed for it. It also discusses the IRP procedure in depth. An economic analysis is presented that provides a standardized way of assessing robotic investment. The industrial test case and its outcomes are then explained, and finally the findings are stated.

Methodology

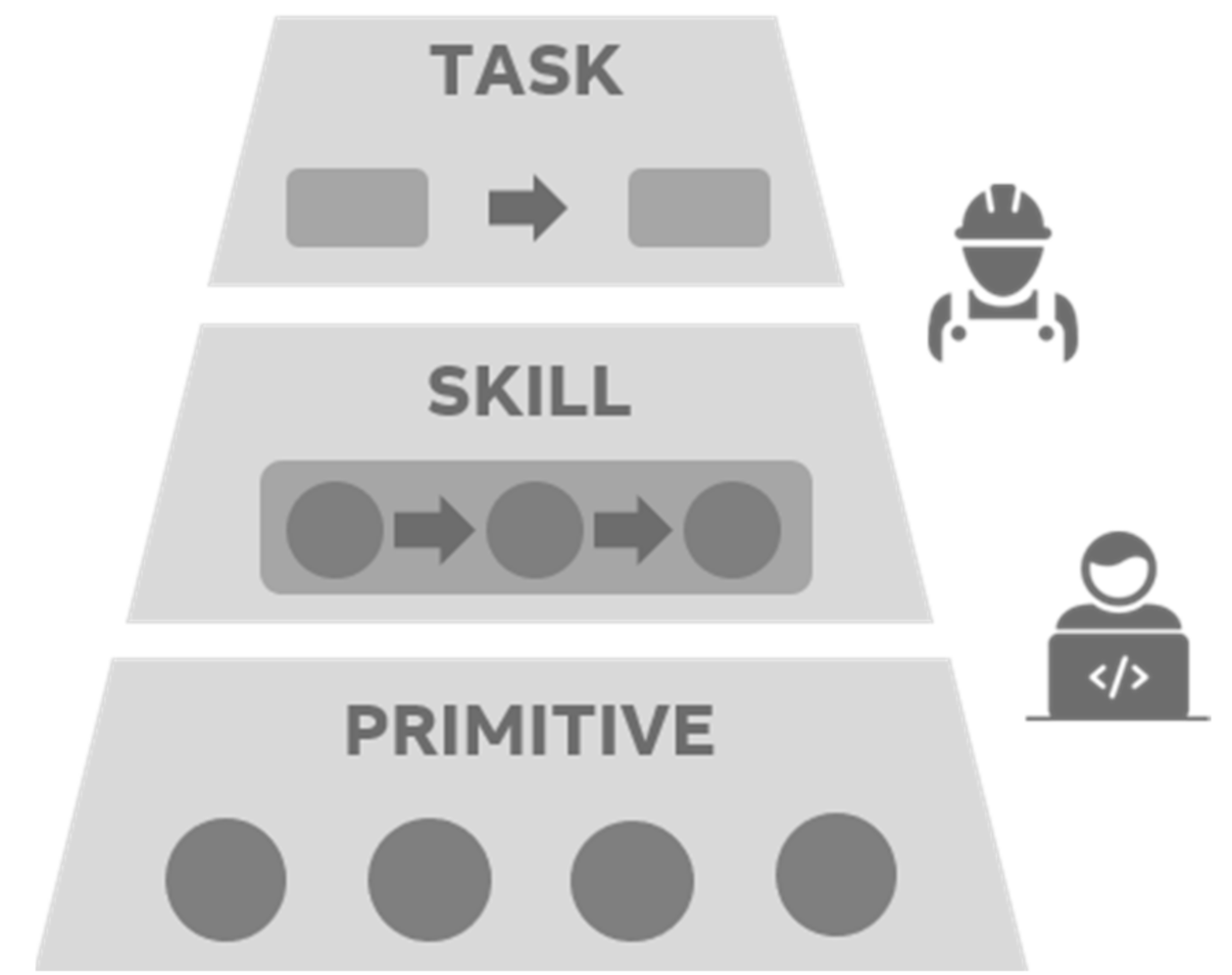

To obtain a tool that can be managed entirely by a worker familiar with automation, a parameterized programming approach was used. It is organized as a layered pyramidal framework composed of Primitives, Skills, and Tasks, as shown in Figure 1.

Figure 1. Layered pyramidal framework. Image Credit: Giberti, et al., 2022

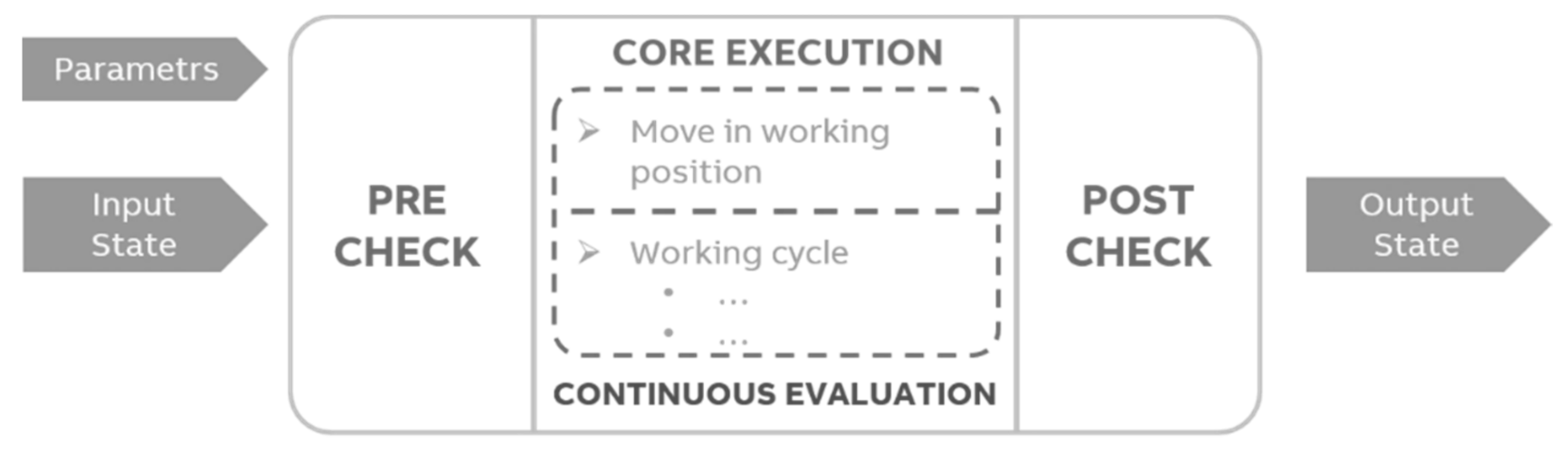

Each Skill is structured in three main parts, as shown in Figure 2.

Figure 2. Structure of a standard and parametric skill. Image Credit: Giberti, et al., 2022

Tasks sit at the top of the layered pyramidal framework in Figure 1 and are created by connecting different Skills in a specific order. The sequence can also branch, like a state machine, with each block being executed when the input state has the required value. Different sections of the sequence can thus be executed depending on what has occurred previously.
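The idea of a Task as a branching sequence of Skills can be illustrated with a minimal Python sketch. It is not the authors' software: the `Skill` and `Task` classes, the skill names, and the output states `"ok"` and `"empty"` are all hypothetical, chosen only to show how the state returned by one Skill selects the next one.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional, Tuple


@dataclass
class Skill:
    """Hypothetical parametric skill: an action plus user-set parameters."""
    name: str
    action: Callable[[dict], str]  # returns an output state such as "ok"
    params: dict = field(default_factory=dict)


@dataclass
class Task:
    """Skills linked like a state machine: (skill, state) -> next skill."""
    skills: Dict[str, Skill]
    transitions: Dict[Tuple[str, str], Optional[str]]
    start: str

    def run(self, max_steps: int = 100) -> list:
        executed, current = [], self.start
        for _ in range(max_steps):
            if current is None:  # no further skill: the task is finished
                break
            skill = self.skills[current]
            state = skill.action(skill.params)
            executed.append((current, state))
            current = self.transitions.get((current, state))
        return executed


# Hypothetical skill actions (placeholders for real robot motions).
def pick(params: dict) -> str:
    return "ok" if params.get("piece_present", True) else "empty"


def place(params: dict) -> str:
    return "ok"


def call_operator(params: dict) -> str:
    return "ok"


def build_task(piece_present: bool) -> Task:
    skills = {
        "pick": Skill("pick", pick, {"piece_present": piece_present}),
        "place": Skill("place", place),
        "call_operator": Skill("call_operator", call_operator),
    }
    transitions = {
        ("pick", "ok"): "place",             # normal branch
        ("pick", "empty"): "call_operator",  # alternative branch
        ("place", "ok"): None,
        ("call_operator", "ok"): None,
    }
    return Task(skills, transitions, start="pick")


print(build_task(True).run())
print(build_task(False).run())
```

Keeping the branching in a transition table, rather than inside each Skill, means the same Skills can be reused in a different order for another Task.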

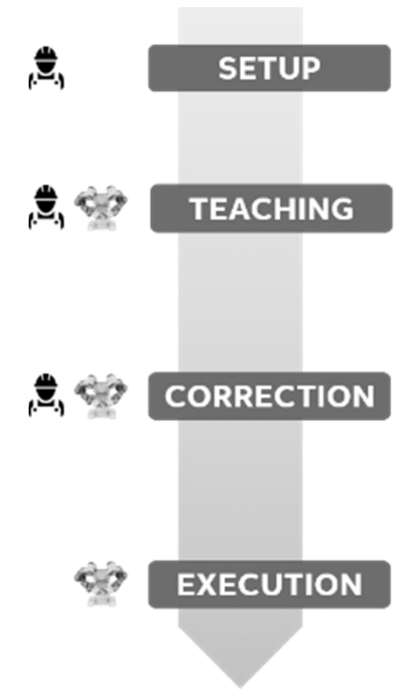

Figure 3 depicts the major steps of the IRP procedure, which allows non-expert users to carry out the teaching and correction phases, eventually leading to the execution of tasks.

Figure 3. Steps of the Interactive Refinement Procedure (IRP). Image Credit: Giberti, et al., 2022

The procedure begins with the Translation Phase. If the process requires specific hardware or software adjustments that differ from the general bundle, this phase includes designing and developing the appropriate components.

Once the Translation Phase is complete, the operator in the production department receives a list with the sequence of Skills, together with guidelines for handling production.

During the teaching phase, the user accesses the cobot’s interaction capabilities, supported by the manager software, to set the correct parameters for the implementation of each skill. This process can be carried out in a variety of ways.

The taught task may still contain inconsistencies that should be identified and corrected before production starts. For this reason, a Test and Correction phase is carried out, in which each operation is executed at a lower speed than the actual execution speed, allowing the operator to supervise and intervene if any inaccuracy or problem occurs.

The Task is now complete and ready for operation. It is possible to start with a Test Execution and then repeat the Teaching and Correction steps. When everything is in order, the production process can begin.
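The teach, test, and correct cycle described above can be sketched as a simple loop. This is only an illustration under stated assumptions: `refine_task`, `teach`, and `slow_test` are hypothetical names, and the acceptance rule in `slow_test` is invented for the example. It does not reproduce the manager software.

```python
def refine_task(skill_names, teach, slow_test, max_rounds=5):
    """Teach every skill once, then alternate slow-speed test and correction."""
    # Teaching phase: the user sets the parameters of each skill.
    params = {name: teach(name, None) for name in skill_names}
    for round_no in range(1, max_rounds + 1):
        faulty = slow_test(params)  # Test phase at reduced speed
        if not faulty:
            return params, round_no  # task is ready for production
        for name in faulty:  # Correction phase: re-teach only faulty skills
            params[name] = teach(name, params[name])
    raise RuntimeError("task still faulty after max_rounds")


def teach(name, previous):
    # Hypothetical: the first teaching is rough, each correction refines it.
    return 0 if previous is None else previous + 1


def slow_test(params):
    # Hypothetical acceptance rule: "place" needs one correction to pass.
    required = {"pick": 0, "place": 1}
    return [name for name, value in params.items() if value < required[name]]


final_params, rounds = refine_task(["pick", "place"], teach, slow_test)
print(final_params, rounds)
```

In this toy run only the `place` skill is re-taught, which mirrors the idea that corrections are applied to individual Skills rather than to the whole Task.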

Results and Discussion

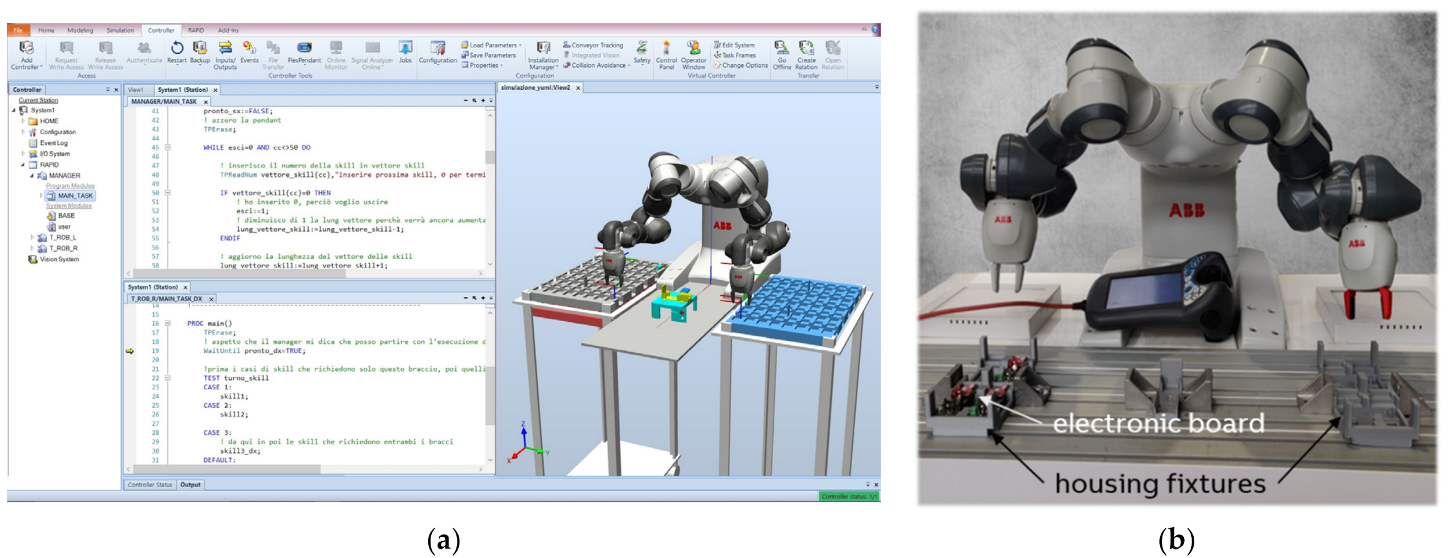

To evaluate various processes in the context of robotic implementation, a specific N-year performance indicator called Implementation Efficiency has been developed. The proposed methodology was tested in an industrial setting in partnership with the ABB Building Automation department in Vittuone (Milan, Italy).

Figure 4 shows an example of the code developed for the manager program (Figure 4a), as well as a frame of the resulting robotic procedure, in which the two arms work at the same time (Figure 4b). The fixture specifically designed to hold the electronic boards, one for each of the two arms, is visible in the figure.

Figure 4. Implemented firmware update process for security sensor electronic boards. (a) Software development for the manager program. (b) View of the two YuMi arms operating at the same time. Image Credit: Giberti, et al., 2022

Table 1 shows the average teaching and correction times of the two non-expert users over repeated trials. The maximum and minimum times are given alongside the average in the second column.

Table 1. Times spent on teaching and correction phase by non-expert robotic users. Source: Giberti, et al., 2022

| Skills | Teaching and Correction Time (average of 2nd, 3rd and 4th attempts for both the operators) | 1st Attempt Time (average time on two operators) |
|---|---|---|
| Pick a piece from the multi-board 4 × 4 tray module | 1′41″ (max 1′48″, min 1′38″) | 2′06″ |
| Place a piece on the fixture | 2′30″ (max 2′39″, min 2′21″) | 3′01″ |
| Connect to PC | - | |
| Pick a piece from the fixture (after SW updating) | 1′49″ (max 1′58″, min 1′41″) | 2′11″ |
| Place a piece on the output tray (after SW updating) | 1′54″ (max 2′02″, min 1′43″) | 2′19″ |

Table 2 shows the time required for the slow-speed test, which is performed on the complete task once the teaching and correction phases have been carried out for all of its skills, along with the final cycle time per piece. Adding the times from Tables 1 and 2 shows that the entire application was set up in less than 10 minutes.
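The sum can be checked with a few lines of Python. The figures are the average teaching and correction times from Table 1 and the 53″ slow-speed test from Table 2. The sketch assumes that "Connect to PC", which has no reported time, contributes nothing, and the variable names are illustrative only.

```python
def to_seconds(minutes: int, seconds: int) -> int:
    return 60 * minutes + seconds


# Average teaching and correction times (2nd to 4th attempts), Table 1.
teaching_avg = {
    "pick from tray": to_seconds(1, 41),
    "place on fixture": to_seconds(2, 30),
    "pick from fixture": to_seconds(1, 49),
    "place on output tray": to_seconds(1, 54),
}
# First-attempt times, Table 1.
first_attempt = {
    "pick from tray": to_seconds(2, 6),
    "place on fixture": to_seconds(3, 1),
    "pick from fixture": to_seconds(2, 11),
    "place on output tray": to_seconds(2, 19),
}
slow_speed_test = 53  # seconds, Table 2

total_avg = sum(teaching_avg.values()) + slow_speed_test
total_first = sum(first_attempt.values()) + slow_speed_test

print(divmod(total_avg, 60))    # (minutes, seconds) using average times
print(divmod(total_first, 60))  # (minutes, seconds) using first-attempt times
```

With the average times the total is 527 s, or 8′47″, which is under 10 minutes. With the first-attempt times it comes to 630 s, or 10′30″, so the figure quoted above corresponds to the averaged attempts.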

Table 2. Slow speed execution time and final cycle time. Source: Giberti, et al., 2022

| Item | Time |
|---|---|
| Slow-speed test (i.e., test of the complete task executed after defining the input parameter for each skill) | 53″ |
| Cycle time per piece | 37″ |

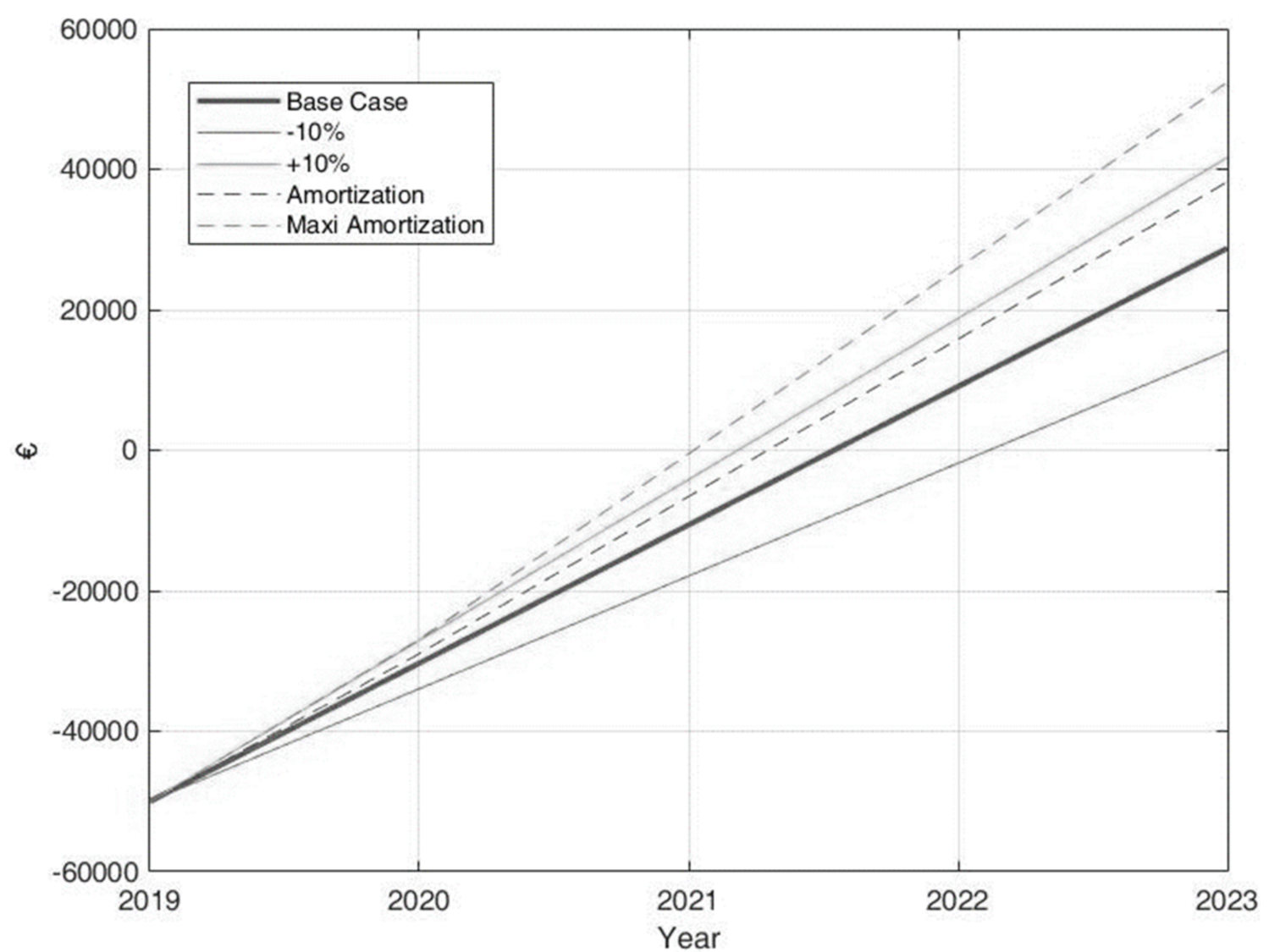

Table 3 summarizes the main input data for each of the processes evaluated. Figure 5 compares the payback scenarios.

Table 3. Single process data synthesis. Source: Giberti, et al., 2022

| Process | Firmware Update | Remote Controller | USB Charger | CTS (Bottom) | CTS (Top) | Custom |
|---|---|---|---|---|---|---|
| YuMi allocated time | 24 s (39%) | 67 s (60%) | 34.5 s (81%) | 93 s (95%) | 165 s (94%) | 24 s (75%) |
| YuMi setup time | 57 min | 65 min | 69 min | 89 min | 53 min | 45 min |
| average lot size | 150 pcs | 50 pcs | 135 pcs | 280 pcs | 280 pcs | 200 pcs |
| pieces per year | 3899 pcs | 576 pcs | 2440 pcs | 9112 pcs | 9880 pcs | 2039 pcs |
| lot size threshold | 139 pcs | 56 pcs | 117 pcs | 56 pcs | 19 pcs | 108 pcs |
| saved person-hours | 10.5 h | 5.6 h | 10 h | 195.6 h | 426.9 h | 7.7 h |
| efficiency (N = 2) | 8.19% | −1.31% | 17.37% | 75.16% | 86.20% | 19.51% |

Figure 5. Comparison of Payback time scenarios. Image Credit: Giberti, et al., 2022
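One way to read the Table 3 data is to compare each process's average lot size with its lot size threshold. The short sketch below does this, assuming that the threshold is the lot size a process should exceed for the robot to pay off. That reading is an assumption, since the threshold is not defined in the text here. The function names are illustrative.

```python
# Values copied from Table 3:
# (average lot size in pcs, lot size threshold in pcs, efficiency % at N = 2)
table3 = {
    "Firmware Update": (150, 139, 8.19),
    "Remote Controller": (50, 56, -1.31),
    "USB Charger": (135, 117, 17.37),
    "CTS (Bottom)": (280, 56, 75.16),
    "CTS (Top)": (280, 19, 86.20),
    "Custom": (200, 108, 19.51),
}


def below_threshold(data):
    """Processes whose average lot size is smaller than the threshold."""
    return [name for name, (lot, threshold, _) in data.items() if lot < threshold]


def negative_efficiency(data):
    """Processes whose reported efficiency is negative."""
    return [name for name, (_, _, eff) in data.items() if eff < 0]


print(below_threshold(table3))
print(negative_efficiency(table3))
```

With the Table 3 values, both checks single out the Remote Controller process (50 pcs against a threshold of 56 pcs, efficiency −1.31%).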

Conclusion

The proposed IRP is a method aimed at facilitating the creation of a robotic application. It allows non-expert users to set up a robot while being guided by the machine itself, and to fix any mistakes that occur using an optimization technique.

Non-expert shop floor users only have to provide a good set of input parameters for each skill during a Teaching Phase, which adapts each skill to the particular process, and carry out a Correction Phase to fix any errors that may occur.

The proposed method was tested on a real-world industrial case in which the base software and hardware required for the application were built by expert engineers, while the actual set-up of the robotic application was completed in a very short time by non-expert users, demonstrating the method's flexibility. Because the programming effort is small, the complexity of developing a robotic application has been reduced significantly.

The results presented here are a step forward in the use of robotics in craft manufacturing, overcoming the limitations of traditional automation and of existing collaborative robot use.

Journal Reference:

Giberti, H., Abbattista, T., Carnevale, M., Giagu, L., Cristini, F. (2022) A Methodology for Flexible Implementation of Collaborative Robots in Smart Manufacturing Systems. Robotics, 11(1), p. 9. Available online: https://www.mdpi.com/2218-6581/11/1/9/htm.
