Feb 11 2019

How far are people prepared to go in showing concern for robots? New research suggests that, under specific conditions, some people are willing to put human lives at risk out of concern for robots.

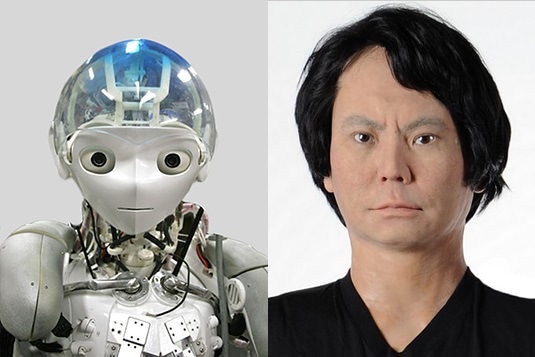

Do people react differently to robots if they perceive the latter as being human-like? In the study, human responses to simulated situations involving robots of varying appearance were investigated. (Image credit: University of Nijmegen/NL)

Robots are no longer used only for perilous tasks, such as detecting and disarming mines. They also serve as nursing assistants and household help. As a growing number of machines equipped with advanced artificial intelligence take on an increasingly diverse range of everyday and specialized tasks, the question of how people perceive them and behave toward them is becoming more and more urgent.

A group of researchers headed by Sari Nijssen of Radboud University in Nijmegen in the Netherlands and Markus Paulus, Professor of Developmental Psychology at LMU, has carried out a study to establish how far people extend consideration to robots and apply moral principles in their behavior toward them. The findings have been reported in the journal Social Cognition.

Sari Nijssen said that the aim of the research was to answer the following question: “Under what circumstances and to what extent would adults be willing to sacrifice robots to save human lives?” The participants faced a hypothetical moral dilemma: Would they be prepared to put a single individual at risk to save a group of injured persons? In the scenarios presented, the intended sacrificial victim was a human, a humanoid robot with an anthropomorphic physiognomy that had been humanized to different degrees, or a robot that was clearly recognizable as a machine.

The study showed that the more the robot was humanized, the less likely participants were to sacrifice it. Scenarios that included priming stories in which the robot was portrayed as a compassionate being, or as a creature with its own experiences, perceptions, and thoughts, were more likely to deter the study participants from sacrificing it to save anonymous humans. In fact, when they were informed of the emotional qualities purportedly displayed by the robot, several of the experimental subjects showed a readiness to sacrifice the injured humans to save the robot from harm.

The more the robot was depicted as human—and in particular the more feelings were attributed to the machine—the less our experimental subjects were inclined to sacrifice it. This result indicates that our study group attributed a certain moral status to the robot. One possible implication of this finding is that attempts to humanize robots should not go too far. Such efforts could come into conflict with their intended function—to be of help to us.

Markus Paulus, Professor of Developmental Psychology, Ludwig Maximilian University of Munich