Image Credit: The University of Hong Kong.

Conventional AI-based medical image diagnosis needs a huge number of annotations as supervision signals for model training. To obtain accurate labels, radiologists prepare radiology reports for each of their patients as part of their clinical routine, and annotation staff then extract and verify structured labels from those reports using human-defined rules and existing natural language processing (NLP) tools.

The eventual accuracy of the extracted labels depends on the quality of the human work and of the NLP tools involved. The procedure is labor-intensive and time-consuming, and therefore costly.

An engineering team at the University of Hong Kong (HKU) has created a new method called “REFERS” (Reviewing Free-text Reports for Supervision), which automatically acquires supervision signals from hundreds of thousands of radiology reports at the same time and can reduce human costs by 90%. It also achieves a high level of prediction accuracy, outperforming its counterpart, conventional AI-based medical image diagnosis.

The new technique is a significant step forward in the development of generalized medical artificial intelligence. The breakthrough was reported in Nature Machine Intelligence.

AI-enabled medical image diagnosis has the potential to support medical specialists in reducing their workload and improving the diagnostic efficiency and accuracy, including but not limited to reducing the diagnosis time and detecting subtle disease patterns.

Professor Yizhou Yu, Team Leader, Department of Computer Science, Faculty of Engineering, The University of Hong Kong

Professor Yu stated, “We believe abstract and complex logical reasoning sentences in radiology reports provide sufficient information for learning easily transferable visual features. With appropriate training, REFERS directly learns radiograph representations from free-text reports without the need to involve manpower in labeling.”

The study team trained REFERS on a public database that contains 370,000 X-ray images and their associated radiology reports, covering 14 common chest diseases such as atelectasis, cardiomegaly, pleural effusion, pneumonia and pneumothorax. With only 100 radiographs, the researchers were able to build a radiograph recognition model that reaches an accuracy of 83%.

When the number of radiographs is increased to 1,000, the model reaches an accuracy of 88.2%, outperforming a counterpart trained with 10,000 radiologist annotations (accuracy of 87.6%). With 10,000 radiographs, the accuracy is 90.1%. In general, a prediction accuracy of more than 85% is useful in real-world clinical applications.

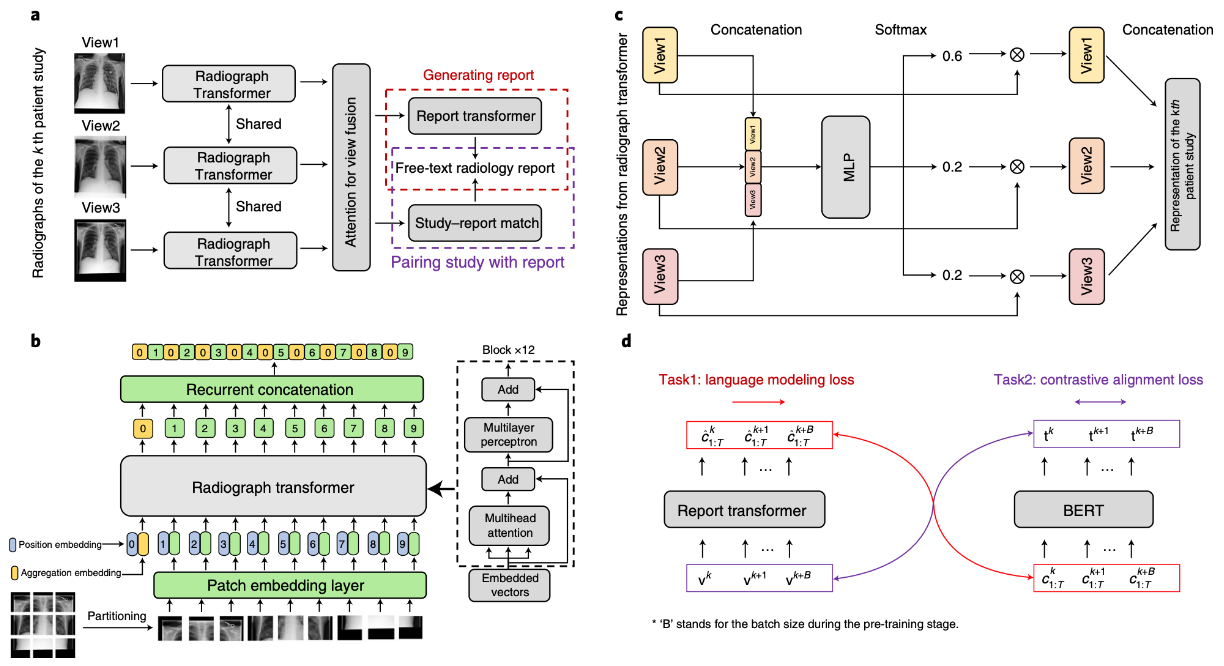

REFERS accomplishes this by performing two report-related tasks: report generation and radiograph–report matching. In the first task, it translates radiographs into text reports by encoding each radiograph into an intermediate representation, which a decoder network then uses to predict the report text.

The neural network is trained with gradient-based optimization, which updates its weights according to a cost function that measures how closely the predicted report text matches the real one.
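To make the idea of gradient-based training concrete, here is a minimal sketch in plain Python. It is not the REFERS implementation: it assumes a toy linear decoder, a hypothetical four-word vocabulary, a made-up three-number radiograph encoding, and cross-entropy as the cost function. It only shows how repeated gradient steps make the correct report word more likely.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train_step(weights, feature, target_index, lr=0.5):
    """One gradient-descent step for a linear decoder (logits = W @ feature).

    Updates `weights` in place and returns the loss measured before the update.
    """
    logits = [sum(w * f for w, f in zip(row, feature)) for row in weights]
    probs = softmax(logits)
    loss = -math.log(probs[target_index])  # cross-entropy for one word
    for k, row in enumerate(weights):
        # Gradient of the loss with respect to logit k: p_k - 1[k == target]
        grad_logit = probs[k] - (1.0 if k == target_index else 0.0)
        for j in range(len(row)):
            row[j] -= lr * grad_logit * feature[j]
    return loss

# Hypothetical values, chosen only for illustration.
vocab = ["no", "pleural", "effusion", "pneumothorax"]
feature = [1.0, 0.5, -0.3]                 # stand-in for an encoded radiograph
weights = [[0.0] * len(feature) for _ in vocab]
target = vocab.index("effusion")           # the word found in the real report

losses = [train_step(weights, feature, target) for _ in range(20)]
print(f"loss before training: {losses[0]:.3f}, after 20 steps: {losses[-1]:.3f}")
```

A real report decoder predicts a whole sequence of words and sums a loss like this over every position, which is how each word in a report can act as a supervision signal.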

In the second task, REFERS first encodes both radiographs and free-text reports into the same semantic space, where contrastive learning aligns the representation of each report with that of its associated radiographs.
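The matching task can be illustrated with a generic contrastive loss. The sketch below is an assumption for illustration, not the loss used by the REFERS authors: it uses cosine similarity, a hypothetical temperature of 0.1, hand-written two-dimensional embeddings, and only the image-to-report direction (practical systems often add the report-to-image direction as well). The loss is low when each radiograph embedding sits closest to its own report and high when the pairs are mixed up.

```python
import math

def normalize(v):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def contrastive_loss(image_embs, report_embs, temperature=0.1):
    """Average image-to-report contrastive loss over a batch.

    Row i of `image_embs` is assumed to belong to row i of `report_embs`;
    every other report in the batch serves as a negative example.
    """
    imgs = [normalize(v) for v in image_embs]
    reps = [normalize(v) for v in report_embs]
    total = 0.0
    for i, img in enumerate(imgs):
        sims = [sum(a * b for a, b in zip(img, rep)) / temperature for rep in reps]
        m = max(sims)
        log_denominator = m + math.log(sum(math.exp(s - m) for s in sims))
        total += log_denominator - sims[i]  # -log softmax of the true pair
    return total / len(imgs)

# Hypothetical embeddings, chosen only for illustration.
images = [[1.0, 0.0], [0.0, 1.0]]
reports = [[2.0, 0.0], [0.0, 3.0]]          # report i points the same way as image i

matched_loss = contrastive_loss(images, reports)
mismatched_loss = contrastive_loss(images, list(reversed(reports)))
print(f"matched pairs: {matched_loss:.5f}, mismatched pairs: {mismatched_loss:.5f}")
```

Minimizing a loss of this kind during training pulls each radiograph toward its own report in the shared space and pushes it away from the other reports in the batch.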

Compared to conventional methods that heavily rely on human annotations, REFERS has the ability to acquire supervision from each word in the radiology reports. We can substantially reduce the amount of data annotation by 90% and the cost to build medical artificial intelligence. It marks a significant step towards realizing generalized medical artificial intelligence.

Dr. Hong-Yu Zhou, Study First Author, Department of Computer Science, The University of Hong Kong

Journal Reference:

Zhou, H-Y., et al. (2022) Generalized radiograph representation learning via cross-supervision between images and free-text radiology reports. Nature Machine Intelligence. doi.org/10.1038/s42256-021-00425-9.