Image Credit: Queensland University of Technology

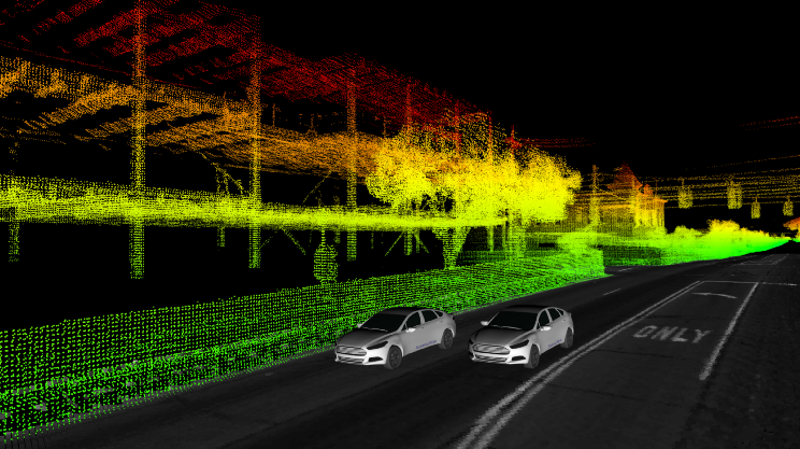

Senior author of the study and Australian Research Council Laureate Fellow Professor Michael Milford said the study is the result of an investigation into how cameras and LIDAR sensors, which are frequently used in autonomous cars, can help those vehicles better perceive their surroundings.

The key idea here is to learn which cameras to use at different locations in the world, based on previous experience at that location. For example, the system might learn that a particular camera is very useful for tracking the position of the vehicle on a particular stretch of road, and choose to use that camera on subsequent visits to that section of road.

Michael Milford, Study Senior Author and Joint Director, Centre for Robotics, Queensland University of Technology
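The idea Milford describes can be illustrated with a small sketch. This is not the method from the paper; it assumes a hypothetical log of past traversals in which each entry records a place identifier, the camera used, and the resulting localization error in metres, and it simply picks the camera with the lowest average error at each place. The names `learn_best_cameras`, `select_camera`, and the camera and place labels are invented for illustration.

```python
from collections import defaultdict


def learn_best_cameras(history):
    """Pick, for each place, the camera with the lowest mean past error.

    history: iterable of (place_id, camera_id, error_m) tuples collected on
    earlier visits (a hypothetical log format, assumed for this sketch).
    """
    errors = defaultdict(lambda: defaultdict(list))
    for place_id, camera_id, error_m in history:
        errors[place_id][camera_id].append(error_m)

    best = {}
    for place_id, per_camera in errors.items():
        # Choose the camera whose average error at this place is smallest.
        best[place_id] = min(
            per_camera,
            key=lambda cam: sum(per_camera[cam]) / len(per_camera[cam]),
        )
    return best


def select_camera(best, place_id, default="front"):
    """Return the learned camera for a place, or a default for unseen places."""
    return best.get(place_id, default)


# Made-up example data: two stretches of road, two cameras.
history = [
    ("road_A", "front", 0.8),
    ("road_A", "left", 0.3),
    ("road_A", "left", 0.5),
    ("road_B", "front", 0.2),
    ("road_B", "left", 1.4),
]
best = learn_best_cameras(history)
print(select_camera(best, "road_A"))  # left
print(select_camera(best, "road_B"))  # front
print(select_camera(best, "road_C"))  # front (no prior experience, so default)
```

On a later visit to `road_A`, this sketch would reuse the left camera because it performed best there before, which mirrors the "previous experience at that location" idea in the quote.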

The project is being led by Dr. Punarjay (Jay) Chakravarty on behalf of the Ford Autonomous Vehicle Future Tech group.

Autonomous vehicles depend heavily on knowing where they are in the world, using a range of sensors including cameras. Knowing where you are helps you leverage map information that is also useful for detecting other dynamic objects in the scene. A particular intersection might have people crossing in a certain way.

Dr. Punarjay Chakravarty, Ford Autonomous Vehicle Future Tech group, Ford Motor Company

Chakravarty added, “This can be used as prior information for the neural nets doing object detection and so accurate localization is critical and this research allows us to focus on the best camera at any given time. To make progress on the problem, the team has also had to devise new ways of evaluating the performance of an autonomous vehicle positioning system.”

We are focusing not just on how the system performs when it’s doing well, but what happens in the worst-case scenario.

Dr. Stephen Hausler, Joint Lead Researcher and Research Fellow, Centre for Robotics, Queensland University of Technology
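To see why a worst-case view can tell a different story from an average, consider the following sketch. It is an illustrative assumption, not the evaluation protocol from the paper: it summarizes a list of per-location localization errors by their mean, their maximum, and the fraction of locations that fall within a chosen tolerance. The function name `summarize_errors`, the 1.0 m tolerance, and the error values are all hypothetical.

```python
def summarize_errors(errors_m, tolerance_m=1.0):
    """Summarize per-location localization errors (in metres).

    Returns the mean error, the worst (largest) error, and the fraction of
    locations whose error is within tolerance_m. The tolerance is an
    assumed value chosen only for this example.
    """
    n = len(errors_m)
    return {
        "mean_m": sum(errors_m) / n,
        "worst_m": max(errors_m),
        "coverage": sum(e <= tolerance_m for e in errors_m) / n,
    }


# Made-up numbers: system A is usually very accurate but fails badly once;
# system B is slightly less accurate on typical locations but never fails.
system_a = summarize_errors([0.2, 0.3, 0.2, 4.0])
system_b = summarize_errors([0.4, 0.5, 0.4, 0.9])

print(system_a["worst_m"], system_a["coverage"])  # 4.0 0.75
print(system_b["worst_m"], system_b["coverage"])  # 0.9 1.0
```

In this toy comparison, system A looks better on three of the four locations, yet it is the only one that leaves a location outside the tolerance. A metric that looks only at typical performance would hide that failure.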

The study was part of a larger fundamental research effort by Ford into how cameras and LIDAR sensors, which are commonly used in autonomous cars, can help vehicles better understand their surroundings.

The study was published in IEEE Robotics and Automation Letters and will also be presented at the IEEE/RSJ International Conference on Intelligent Robots and Systems in Kyoto, Japan, in October 2022.

Punarjay Chakravarty, Shubham Shrivastava, and Ankit Vora from Ford worked alongside QUT researchers Stephen Hausler, Ming Xu, Sourav Garg, and Michael Milford.

Journal Reference:

Hausler, S., et al. (2022) Improving Worst Case Visual Localization Coverage via Place-Specific Sub-Selection in Multi-Camera Systems. IEEE Robotics and Automation Letters. doi:10.1109/LRA.2022.3191174.