Image Credit: Max Planck Institute

To better understand how AI will choose to appear to humans, a group of international researchers, among them Dr. Edmond Awad from the University of Exeter, has launched a new “citizen science” study.

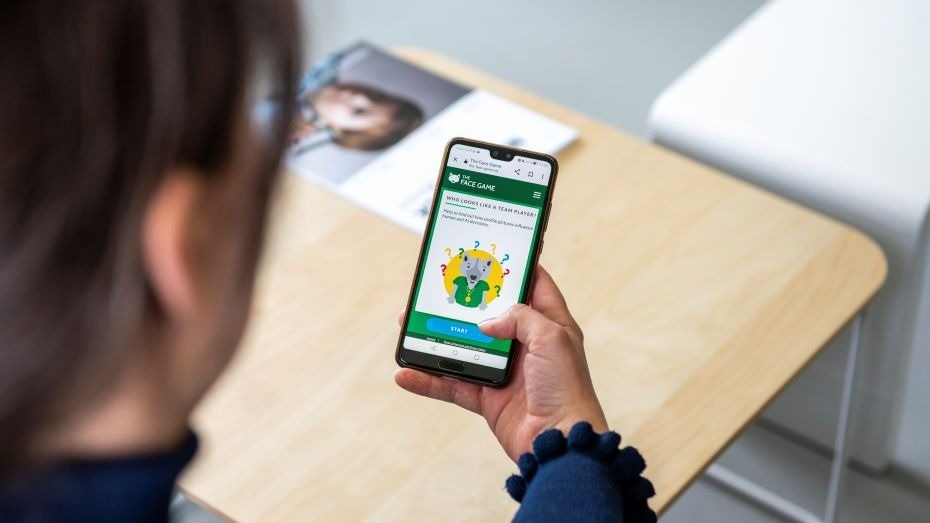

The Face Game is an online game in which players compete against AI systems by rating profile pictures according to whether the people shown appear more “self-seeking” or more like “team players.”

The objective of the game is to better understand how AI will learn to select facial expressions depending on the impression it wants to make and on the person it is interacting with.

Researchers from the Max Planck Institute for Human Development are leading the online experiment, with participation from the Toulouse School of Economics, the University of Exeter, the University of British Columbia, the Universidad Autónoma de Madrid, and the Université Paris Cité.

“This game provides a valuable tool for examining on a worldwide scale how individuals engage with each other and AI algorithms through the use of profile pictures,” said Dr. Awad, Senior Lecturer in the Department of Economics and the Institute for Data Science and Artificial Intelligence at the University of Exeter.

The Face Game is intended to help researchers explore the future of digital interactions with AI. Profile pictures are widely used on social media and other online platforms, and they play an important part in the first impression people make on others.

AI already gives users the digital tools to alter their online pictures however they like, for example to make themselves look younger.

However, AI is not only assisting humans in playing this ‘face game’ amongst themselves, but it is also learning the game from humans and discreetly determining which face it will display when dealing with them.

“As we increasingly come across AI replicants with self-generated faces, we need to understand what they learn from observing us play the face game and ensure that we retain control over how we interact with these digital entities,” said Iyad Rahwan, Director of the Center for Humans and Machines at the Max Planck Institute for Human Development.

His research center investigates ethical issues surrounding AI and the concept of Machine Behavior.

The project comes from the same team that created the Moral Machine, a large-scale online experiment that went viral in 2016.

That experiment examined the ethical dilemmas autonomous vehicles face, revealing both universal principles and cross-cultural differences in how people expect AI to behave. The findings were published in leading journals, including Science and Nature.

The Face Game, developed by researchers at the Universidad Autónoma de Madrid, draws on multimodal AI technologies, including discrimination-aware machine learning for monitoring human behavior and the generation of realistic synthetic face images.

Journal References

Bonnefon, J.-F., et al. (2016) The social dilemma of autonomous vehicles. Science. doi:10.1126/science.aaf2654.

Awad, E., et al. (2018) The Moral Machine experiment. Nature. doi:10.1038/s41586-018-0637-6.