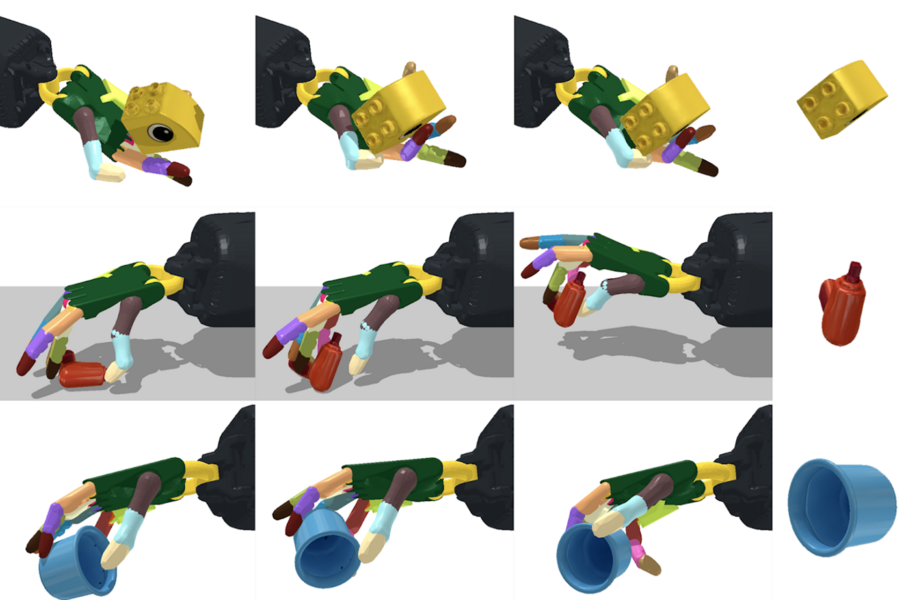

We present a system for re-orienting novel objects with an anthropomorphic robotic hand in any configuration, including with the hand facing upwards or downwards. The system can re-orient a diverse set of objects with novel shapes. Our paper won the Best Paper Award at the Conference on Robot Learning (CoRL) 2021.

Imagine a home robot in the future. One capability it will need is the ability to use different tools, and this motivates our research: to use a tool, the robot first needs to pick it up and then re-orient it the right way. For example, after picking up a knife, we change the way we grasp it in order to cut. Our goal was to enable such re-orientation for arbitrary objects, albeit in simulation for now.

In terms of robotics, why is in-hand object re-orientation so challenging?

In-hand object re-orientation is a long-standing challenge in robotics for two reasons: a large number of motors must be controlled, and the contact state between the fingers and the object changes frequently.

Teaching Robots Dexterous Hand Manipulation

Video Credit: YouTube/MITCSAIL

How does the weight of the object affect the results?

During training, the weight of each object is sampled uniformly at random from 50 g to 150 g. During testing, we found that once the object weight exceeds 150 g, the success rate goes down as the weight increases. This is expected, as generalization to out-of-distribution data is still a challenging problem in machine learning. The task itself also becomes harder as the weight increases. Just imagine using your own hand, facing downward, to re-orient a pen that weighs 100 g versus one that weighs 1000 g.
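The weight randomization described above can be illustrated with a minimal sketch. Only the 50 g to 150 g range comes from the interview; the function names, the seed, and the use of Python's standard `random` module are assumptions made for illustration and are not taken from the authors' code.

```python
import random

# Training range quoted in the interview: 50 g to 150 g.
TRAIN_MASS_MIN_G = 50.0
TRAIN_MASS_MAX_G = 150.0


def sample_training_mass_g(rng: random.Random) -> float:
    """Draw one object mass (in grams) uniformly from the training range."""
    return rng.uniform(TRAIN_MASS_MIN_G, TRAIN_MASS_MAX_G)


def is_in_training_range(mass_g: float) -> bool:
    """Return True if a test mass lies inside the range seen during training."""
    return TRAIN_MASS_MIN_G <= mass_g <= TRAIN_MASS_MAX_G


# Hypothetical usage: one mass per training episode, with a fixed seed
# so the sketch is reproducible.
rng = random.Random(0)
masses = [sample_training_mass_g(rng) for _ in range(1000)]
```

A check such as `is_in_training_range(1000.0)` returning `False` flags the out-of-distribution case mentioned above, where a drop in success rate would be expected.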

What makes re-orientation with downward-facing hands challenging?

Re-orientation with downward-facing hands is challenging because objects can fall easily: in addition to re-orienting the object, the hand also needs to stabilize it. In the upward-facing case, the palm or fingers provide a supporting force that prevents the object from falling. There is no such direct supporting force from the hand when it faces downwards.

A new system can re-orient over 2,000 different objects, with the robotic hand facing both upwards and downwards. Image Credit: MIT CSAIL.

What were some of the testing processes that were involved in this research?

We test the learned policies on re-orienting objects from (1) the training object dataset; (2) the training object dataset with noise added to the environment dynamics, such as might result from inaccurate estimation of the object state, friction in the motors, etc.; and (3) a test set of objects that were held out during training.

The first test investigates how well the policy learns to re-orient the objects it was trained on. The second test measures how robust the policy is to unknown dynamics. This reflects the real-world scenario, as we cannot know the exact dynamics of real-world environments, such as the friction model. The third test measures how well the policies generalize to objects whose geometric shapes are out of distribution.
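As a rough illustration of the held-out evaluation, the sketch below splits a set of object IDs into training and held-out subsets and computes a success rate over one subset. Everything here is hypothetical: the helper names, the 20% held-out fraction, and the placeholder `dummy_episode` rollout are assumptions for illustration, not the authors' evaluation code. A real rollout would run the learned policy in simulation, and the noisy-dynamics test would add perturbations inside that rollout.

```python
import random
from typing import Callable, List, Sequence, Tuple


def split_objects(
    object_ids: Sequence[str], held_out_fraction: float, seed: int = 0
) -> Tuple[List[str], List[str]]:
    """Shuffle object IDs and split them into (train, held_out) lists."""
    ids = list(object_ids)
    random.Random(seed).shuffle(ids)
    n_held_out = int(len(ids) * held_out_fraction)
    return ids[n_held_out:], ids[:n_held_out]


def success_rate(
    object_ids: Sequence[str], run_episode: Callable[[str], bool]
) -> float:
    """Fraction of objects for which a single episode succeeds."""
    results = [run_episode(obj) for obj in object_ids]
    return sum(results) / len(results)


def dummy_episode(object_id: str) -> bool:
    """Placeholder rollout that always succeeds; stands in for a simulator."""
    return True


all_objects = [f"object_{i}" for i in range(10)]
train_objects, held_out_objects = split_objects(all_objects, held_out_fraction=0.2)
held_out_rate = success_rate(held_out_objects, dummy_episode)
```

Keeping the held-out objects entirely separate from the training list is what makes the third test a measure of generalization rather than memorization.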

Why was it so important to perform this research with differing hand orientations?

Let's again imagine we have a robot assistant in our home. Unlike specially designed factory environments, home environments are unstructured: objects can be anywhere and in any position or orientation.

When the robot uses tools such as pliers, its hand can be in any orientation. One could have the robot first turn its hand to face upward and only then re-orient the tool to the desired orientation, but this makes the robot look unnatural and cumbersome. More importantly, it is much less efficient in terms of time.

What are some typical daily tasks that this research could help with?

Our learned skill (in-hand object re-orientation) can enable fast pick-and-place of objects in desired orientations and locations. For example, it is a common demand to pack objects into slots for kitting in logistics and manufacturing. Currently, this is usually achieved via a two-stage process involving re-grasping. Our system will achieve it in one step, which can substantially improve the packing speed and boost manufacturing efficiency.

Another application is enabling robots to operate a wider variety of tools. The most common end-effector in industrial robots is a parallel-jaw gripper, partly because it is simple to control. However, such an end-effector is physically unable to handle many tools we see in our daily life. For example, even using pliers is difficult for such a gripper, as it cannot dexterously move one handle back and forth. Our system will allow a multi-fingered hand to dexterously manipulate such tools, opening a new area for robotics applications.

What are some medical conditions that the findings of your research potentially help with?

Our research can be expanded into general tool use scenarios. For example, our system can help with fine tool pose adjustment in surgery, more flexible prescription packaging, robotic massage, etc.

What is next for the team at MIT?

Our immediate next step is to achieve such manipulation skills on a real robotic hand. To achieve this, we will need to tackle many challenges. We will investigate overcoming the sim-to-real gap such that the simulation results can be transferred to the real world. We also plan to design new robotic hand hardware through collaboration such that the entire robotic system can be dexterous and low-cost.

Where can readers find more information?

Your readers can find more information on our project website: https://taochenshh.github.io/projects/in-hand-reorientation

The research is funded by the Toyota Research Institute, an Amazon Research Award, and the DARPA Machine Common Sense Program. It will be presented at the 2021 Conference on Robot Learning (CoRL).

About Tao Chen

Tao Chen is a Ph.D. student in the Department of Electrical Engineering and Computer Science at MIT, advised by Prof. Pulkit Agrawal. While he has worked in many different fields in robotics, including navigation, planning, and locomotion, his current research focuses on dexterous manipulation. He received his master's degree from the Robotics Institute at CMU and his bachelor's degree from Shanghai Jiao Tong University. His research papers have been highlighted at top AI and robotics conferences.

About Pulkit Agrawal

Dr. Pulkit Agrawal is the Steven and Renee Finn Chair Professor in the Department of Electrical Engineering and Computer Science at MIT. He earned his Ph.D. from UC Berkeley and co-founded SafelyYou Inc.

His research interests span robotics, deep learning, computer vision, and reinforcement learning. Pulkit completed his bachelor's degree at IIT Kanpur and was awarded the Director's Gold Medal. He is a recipient of the Sony Faculty Research Award, Salesforce Research Award, Amazon Machine Learning Research Award, Signatures Fellow Award, Fulbright Science and Technology Award, Goldman Sachs Global Leadership Award, OPJEMS, and Sridhar Memorial Prize, among others.

Disclaimer: The views expressed here are those of the interviewee and do not necessarily represent the views of AZoM.com Limited (T/A) AZoNetwork, the owner and operator of this website. This disclaimer forms part of the Terms and Conditions of use of this website.